Probabilistic error cancellation with shaded lightcones¶

Background¶

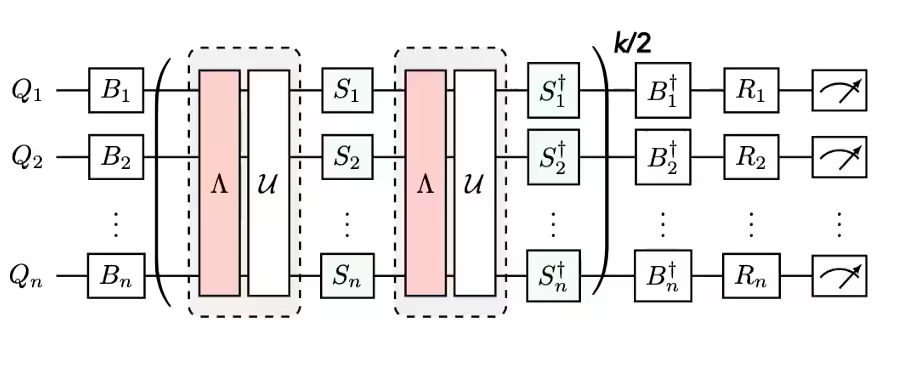

This tutorial demonstrates how to mitigate errors by using the Shaded lightcone (SLC) addon. This addon is an evolution of the probabilistic error cancellation (PEC) technique, wherein a user learns the noise of unique layers in a circuit and then cancels out the noise by applying single-qubit gates and post-processing techniques. Compared to other methods, PEC offers more robust bounds on the bias of the mitigated result, but tends to suffer from a higher overhead in terms of QPU time. During PEC, to compensate for attenuation of the expectation value by noise, the average result is rescaled by a factor of \(\gamma = \exp(\sum_{l,\sigma} 2\lambda_{l,\sigma})\), where \(\lambda_{l,\sigma}\) is the learned noise rate of error Pauli \(\sigma\) at layer \(l\) in the circuit. This rescaling increases the variance by a factor of \(\gamma^2\), and thus also multiplies the number of circuit executions needed on the QPU by \(\gamma^2\), which we call the sampling cost or sampling overhead. Because \(\gamma\) grows exponentially, PEC is often limited to shallow or few-qubit circuits. Learn more about PEC in Probabilistic error cancellation with sparse Pauli-Lindblad models on noisy quantum processors.

If we can identify errors that do not need to be mitigated, we can decrease this sampling cost exponentially. A first step in this direction is implementing locally aware error mitigation, which uses a quickly computable conventional “lightcone” to reduce the PEC overhead by bounding an observable’s sensitivity to errors throughout the circuit, extending the feasibility of PEC to larger scales for some problems. Errors outside of this lightcone cannot affect the measured outcome and can therefore be excluded from the error cancellation procedure. This exclusion decreases the sampling overhead, in some cases substantially, without introducing additional bias. In particular, for measuring a local observable \(O\) of a fixed-depth circuit, the required sampling overhead eventually plateaus when scaling the number of qubits in the circuit (see Fig. 2b in Locality and Error Mitigation of Quantum Circuits.)

Shaded lightcones (SLC) go further, using classical simulations to more tightly bound the sensitivity to errors throughout the circuit. This trades some QPU time for CPU time and reduces the sampling overhead needed to renormalize the bias. Instead of a hard cutoff, each potential error in the circuit is assigned a graded “shade” that upper-bounds the susceptibility of the observable to that error. This refined characterization allows for more efficient, targeted applications of PEC with reduced variance, while giving the user the ability to controllably tune the bias in the observable estimation. See Lightcone shading for classically accelerated quantum error mitigation for more details.

Our workflow for the SLC addon leverages the new Samplomatic and Executor framework, allowing users to have more modular control of execution settings for error suppression and mitigation while retaining ease of use for advanced users. For a deeper understanding of the benefits of this framework and its general features, refer to the Hello samplomatic tutorial.

Workflow for lightcone shading, noise learning, and anti-noise injection¶

For modeling the QPU’s noise, we chose to use a sparse Pauli-Lindblad noise model with 1- and 2-qubit Pauli error rates, locally generated on each qubit and edge of the device. With this choice, the SLC error-mitigation workflow presented in this tutorial is as follows:

CPU — Bound per-error impact of 1- and 2-qubit Pauli errors

Forward propagation (bound effect on observable). Propagate each error to the end of the circuit and compute its commutator with the observable.

Truncate operator terms during evolution to keep computation tractable.

Further tighten these bounds by a loose back-propagation of the observable based on quantum speed limits.

Backward propagation (bound effect on initial state). Propagate each error to the start of the circuit and compute its commutator with the initial state.

QPU — Learn noise rates. Use

NoiseLearnerto estimate rates of the Pauli-Lindblad noise model.CPU — Prioritize mitigation

Update merged bounds with learned noise rates. Combine forward and backward bounds that were previously computed and update them with learned noise rates.

Rank noise components to mitigate by using the computed bounds and learned rates. Prioritize each possible noise error based on its estimated impact on bias and the associated expense to correct.

QPU — Insert antinoise and run. Execute the circuit of interest with antinoise (inverse noise) specified by using

Boxannotations.CPU — Estimate observable. Compute the expectation value, applying measurement-based post-selection to reduce non-Markovian noise impact.

Noise learning overview¶

Noise learning is a common step in several error-mitigation methods, carried out by the NoiseLearner, and can be seen in our PEA error mitigation tutorial, as well as our Propagated noise absorption (PNA) tutorial.

In NoiseLearnerV3, a user can specifically identify the to-be-learned noise layers as `CircuitInstruction <https://quantum.cloud.ibm.com/docs/api/qiskit/qiskit.circuit.CircuitInstruction>`__ objects, which allows users to compute the desired SLC noise bounds for each layer in the manner described above. The learned Pauli-Lindblad model provides coefficients to be used in the PEC-SLC prioritization. The way in which the gates are collected into layers can be determined by using

generate_boxing_pass_manager and unique_2q_instructions convenience functions, and then fed into the SLC utility function generate_noise_model_paulis, as described in Step 2 below.

Part 1 |

Part 2 |

Part 3 |

|---|---|---|

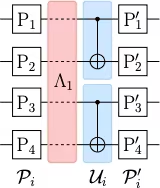

Pauli-twirl two-qubit gate layers |

Repeat identity pairs of layers and learn noise |

Derive a fidelity (error for each noise channel) |

|

|

|

Post-processing overview¶

After executing on quantum hardware by using the Samplomatic and Executor framework, we convert our bitstring measurements into the desired observable value. In the case of our mirrored Ising circuit, we will ideally get a measured observable of 1, as all qubits should ideally return to their starting point of \(\ket{0}\). When computing the observable value with our expectation_values function, we will apply a few post-processing techniques that reduce noise impact. This includes

removing shots affected by non-Markovian noise, readout-error mitigation, as well as accounting for details of our PEC implementation. Details are discussed in Step 4 below.

Requirements¶

Before starting this tutorial, ensure that you have the following packages installed:

Qiskit Runtime Executor Preview and SDK (

pip install "qiskit-ibm-runtime @ git+https://github.com/Qiskit/qiskit-ibm-runtime.git@executor_preview")Qiskit addon Shaded lightcone 0.1 (

pip install "qiskit-addon-slc~=0.1.0”)Qiskit addon utils (

pip install "qiskit-addon-utils~=0.3.0")Samplomatic v0.13 or more(

pip install samplomatic)Qiskit Visualization support (

pip install "qiskit[visualization]")

Step 0. Setup¶

First, import the packages and functions needed to run this notebook successfully.

[1]:

import logging

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(module)s %(message)s")

# Setting this value prevents itertools.starmap deadlock on UNIX systems

from multiprocessing import set_start_method

set_start_method("spawn")

# Needed to prevent PySCF from parallelizing internally (SLC only)

%set_env OMP_NUM_THREADS=1

env: OMP_NUM_THREADS=1

[63]:

import pickle

import numpy as np

import samplomatic

from matplotlib import pyplot as plt

from qiskit import QuantumCircuit

from qiskit.quantum_info import SparsePauliOp

from qiskit.transpiler import PassManager, generate_preset_pass_manager

from qiskit_addon_slc.bounds import (

compute_backward_bounds,

compute_forward_bounds,

compute_local_scales,

merge_bounds,

tighten_with_speed_limit,

)

from qiskit_addon_slc.utils import generate_noise_model_paulis, map_modifier_ref_to_ref

from qiskit_addon_slc.visualization import draw_shaded_lightcone

from qiskit_addon_utils.exp_vals.expectation_values import executor_expectation_values

from qiskit_addon_utils.exp_vals.measurement_bases import get_measurement_bases

from qiskit_addon_utils.noise_management import gamma_from_noisy_boxes, trex_factors

from qiskit_addon_utils.noise_management.post_selection import PostSelector

from qiskit_addon_utils.noise_management.post_selection.transpiler.passes import (

AddPostSelectionMeasures,

AddSpectatorMeasures,

)

from qiskit_ibm_runtime import Executor, QiskitRuntimeService, QuantumProgram

from qiskit_ibm_runtime.noise_learner_v3 import NoiseLearnerV3

from qiskit_ibm_runtime.options import NoiseLearnerV3Options

from samplomatic.transpiler import generate_boxing_pass_manager

from samplomatic.utils import find_unique_box_instructions

Step 1. Map the problem¶

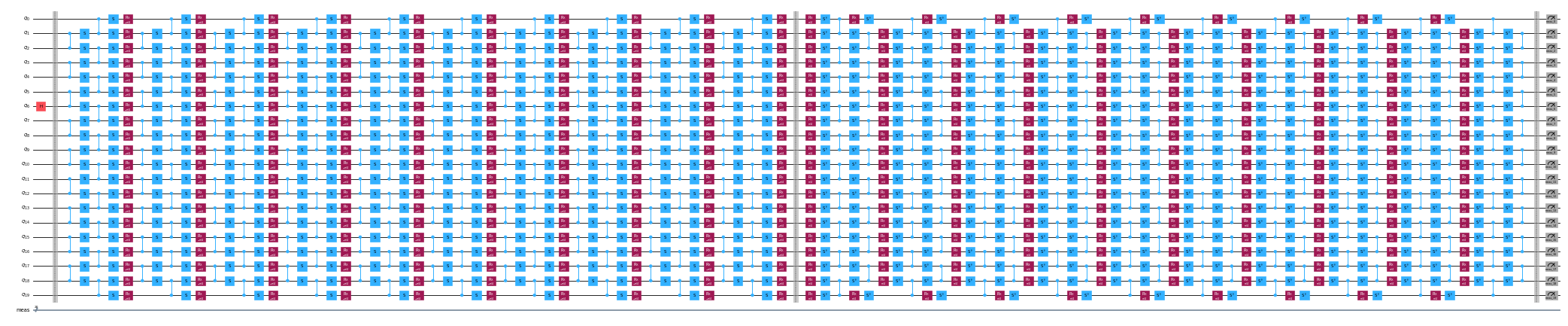

For ease of demonstration, we select a 1D mirror Ising chain. The 1D Ising chain gives a nicely dense circuit structure, which is convenient for showcasing PEC implementations. A mirror circuit makes it straightforward to know the expected outcome (namely, we should measure an observable of 1).

Further, we want to run a mirror circuit, so for every gate in the second half of the circuit, there needs to be an inverse gate in the first half. As the measured observable :math:`<X_6 Z_{13}>` has non-Z-basis measurements, and the executor accounts for the desired basis at the end of the circuit, we provide a prepare_basis function that inserts the appropriate gates at the start of the mirror circuit. This detail is specific to our mirror-circuit demonstration. The

get_measurement_bases function allows us to easily identify which gates are needed and where to append them, as well as keeping track of qubit index subtleties arising from conventions in box annotation as discussed in the “Prepare canonical bases measurements” section.

[3]:

num_qubits = 20

target_obs_sparse = [("XZ", [6, 13], 1.0)]

[4]:

observable = SparsePauliOp.from_sparse_list(target_obs_sparse, num_qubits=num_qubits)

[5]:

bases_virt, reverser_virt = get_measurement_bases(observable)

[6]:

num_trotter_steps = 10

rx_angle = np.pi / 4

[7]:

def construct_ising_circuit(

num_qubits: int, num_trotter_steps: int, rx_angle: float, barrier: bool = True

) -> QuantumCircuit:

circuit = QuantumCircuit(num_qubits)

for _step in range(num_trotter_steps):

circuit.rx(rx_angle, range(num_qubits))

if barrier:

circuit.barrier()

for first_qubit in (1, 2):

for idx in range(first_qubit, num_qubits, 2):

# equivalent to Rzz(-pi/2):

circuit.sdg([idx - 1, idx])

circuit.cz(idx - 1, idx)

if barrier:

circuit.barrier()

return circuit

def prepare_basis(circuit: QuantumCircuit, basis: list[int]) -> QuantumCircuit:

# basis is a list of integer values from 0 to 3. These map to the basis measurement as:

# 0 = I; 1 = Z; 2 = X; 3 = Y

assert len(basis) == circuit.num_qubits

out_circ = circuit.copy_empty_like()

for qb, bas in enumerate(basis):

if bas in {0, 1}:

continue

if bas == 2:

out_circ.h(qb)

elif bas == 3:

out_circ.rx(-np.pi / 2, qb)

out_circ.barrier()

out_circ.compose(circuit, inplace=True)

return out_circ

def mirror_circuit(circuit: QuantumCircuit, *, inverse_first: bool = False) -> QuantumCircuit:

mirror_circ = circuit.copy_empty_like()

mirror_circ.compose(circuit.inverse() if inverse_first else circuit, inplace=True)

mirror_circ.barrier()

mirror_circ.compose(circuit if inverse_first else circuit.inverse(), inplace=True)

mirror_circ.measure_active()

return mirror_circ

[8]:

# Instantiate circuit

circuit = construct_ising_circuit(num_qubits, num_trotter_steps, rx_angle, barrier=False)

mirrored_circuit = mirror_circuit(circuit, inverse_first=True)

mirrored_circuit = prepare_basis(mirrored_circuit, bases_virt[0])

[9]:

mirrored_circuit.draw("mpl", fold=-1, scale=0.3, idle_wires=False, measure_arrows=False)

[9]:

Step 2. Optimize¶

We will optimize details associated with the circuit to be run, the observable to be measured, and the noise-learning parameters. As a starting point, we ensure that we instantiate a backend with fractional gates turned on as an option. These fractional gates will allow for greater sensitivity in some of our post-selection filtering.

[ ]:

token = "<YOUR_TOKEN>"

instance = "<YOUR_INSTANCE>"

# This is used to retrieve shared results

shared_service = QiskitRuntimeService(

channel="ibm_quantum_platform",

token=token,

instance=instance,

)

# This is used to run on real hardware

service = service = QiskitRuntimeService()

qiskit_runtime_service._discover_account:WARNING:2025-11-10 11:19:40,108: Loading account with the given token. A saved account will not be used.

[11]:

backend = service.backend("ibm_kingston", use_fractional_gates=True)

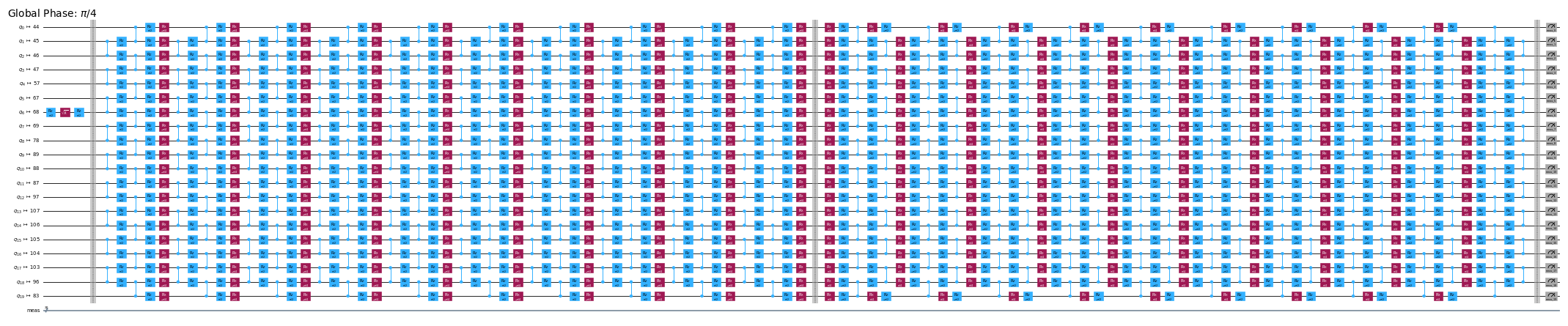

First, we will transpile our circuit to ISA instructions, as required for execution on our QPUs. For the data collected in this experiment, we hand select our qubits based on evaluation of the highest quality chain.

[12]:

layout = [44, 45, 46, 47, 57, 67, 68, 69, 78, 89, 88, 87, 97, 107, 106, 105, 104, 103, 96, 83]

[13]:

isa_pm = generate_preset_pass_manager(backend=backend, initial_layout=layout, optimization_level=0)

isa_circuit = isa_pm.run(mirrored_circuit)

assert isa_circuit.layout.final_index_layout() == layout

isa_observable = observable.apply_layout(layout, num_qubits=isa_circuit.num_qubits)

2025-11-10 11:19:57,810 INFO base_tasks Pass: ContainsInstruction - 0.00715 (ms)

2025-11-10 11:19:57,811 INFO base_tasks Pass: UnitarySynthesis - 0.00525 (ms)

2025-11-10 11:19:57,811 INFO base_tasks Pass: HighLevelSynthesis - 0.02599 (ms)

2025-11-10 11:19:57,811 INFO base_tasks Pass: BasisTranslator - 0.09131 (ms)

2025-11-10 11:19:57,811 INFO base_tasks Pass: SetLayout - 0.02623 (ms)

2025-11-10 11:19:57,812 INFO base_tasks Pass: FullAncillaAllocation - 0.14400 (ms)

2025-11-10 11:19:57,812 INFO base_tasks Pass: EnlargeWithAncilla - 0.06318 (ms)

2025-11-10 11:19:57,813 INFO base_tasks Pass: ApplyLayout - 0.29802 (ms)

2025-11-10 11:19:57,813 INFO base_tasks Pass: CheckMap - 0.07820 (ms)

2025-11-10 11:19:57,814 INFO base_tasks Pass: FilterOpNodes - 0.33283 (ms)

2025-11-10 11:19:57,814 INFO base_tasks Pass: UnitarySynthesis - 0.00691 (ms)

2025-11-10 11:19:57,814 INFO base_tasks Pass: HighLevelSynthesis - 0.13208 (ms)

2025-11-10 11:19:57,816 INFO base_tasks Pass: BasisTranslator - 1.00303 (ms)

2025-11-10 11:19:57,818 INFO base_tasks Pass: FoldRzzAngle - 1.78719 (ms)

2025-11-10 11:19:57,818 INFO base_tasks Pass: ContainsInstruction - 0.00691 (ms)

2025-11-10 11:19:57,818 INFO base_tasks Pass: InstructionDurationCheck - 0.00405 (ms)

[14]:

wire_order = layout + [q for q in range(isa_circuit.num_qubits) if q not in layout]

isa_circuit.draw(

"mpl", fold=-1, scale=0.3, idle_wires=False, wire_order=wire_order, measure_arrows=False

)

[14]:

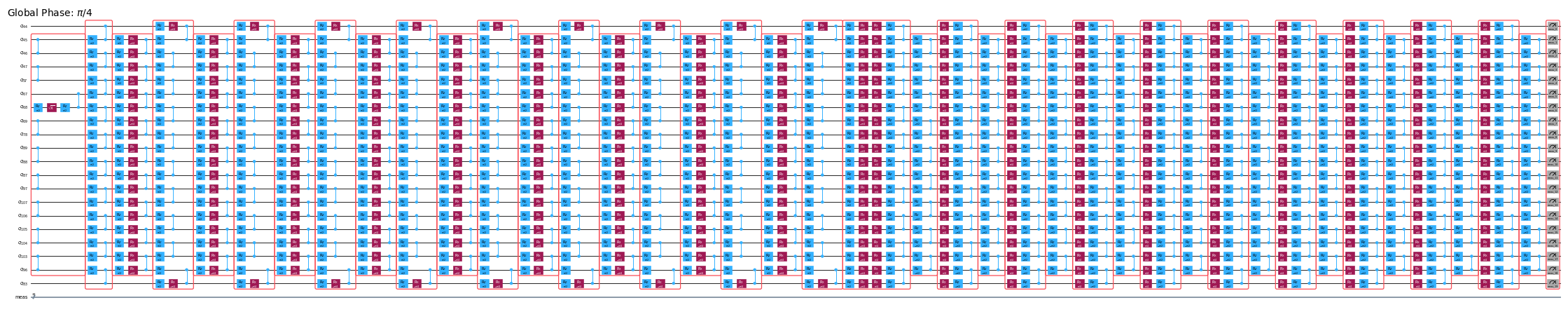

Box the circuit¶

For ease of implementation, we will utilize the generate_boxing_pass_manager transpilation pass, which places the circuit instructions into annotated boxes. These boxes clearly indicate where, in the case of PEC, antinoise should be injected into the circuit. For details on settings, refer to the Samplomatic documentation.

Note that the SLC workflow the use of inject_noise_strategy="individual_modification" later in the process because this allows us to uniquely identify each BoxOp in the circuit.

The find_unique_box_instructions function iterates through the provided boxed circuit and identifies those that have unique 2Q layers or measurements, for the purpose of noise learning and noise injection.

[ ]:

# Box circuit with Twirl and InjectNoise annotations

boxes_pm = generate_boxing_pass_manager(

twirling_strategy="active",

inject_noise_strategy="individual_modification",

inject_noise_targets="gates",

measure_annotations="all",

)

boxed_circuit = boxes_pm.run(isa_circuit)

# Find the unique instructions (layers) from boxed circuit

unique_2q_instructions = find_unique_box_instructions(

boxed_circuit, normalize_annotations=None, undress_boxes=True

)

2025-11-10 11:20:01,088 INFO base_tasks Pass: RemoveBarriers - 0.02289 (ms)

2025-11-10 11:20:01,100 INFO base_tasks Pass: GroupGatesIntoBoxes - 12.38990 (ms)

2025-11-10 11:20:01,101 INFO base_tasks Pass: GroupMeasIntoBoxes - 0.47898 (ms)

2025-11-10 11:20:01,104 INFO base_tasks Pass: AddTerminalRightDressedBoxes - 2.88177 (ms)

2025-11-10 11:20:01,111 INFO base_tasks Pass: AddInjectNoise - 6.66904 (ms)

[16]:

boxed_circuit.draw(

"mpl", fold=-1, scale=0.3, idle_wires=False, wire_order=wire_order, measure_arrows=False

)

[16]:

Prepare canonical bases measurements¶

Due to how the qubits are labeled when identifying unique 2Q layers, one must take special care to keep track of qubit ordering. Below, we introduce the notion of canonical_qubits as a means to appropriately update the qubit ordering when providing it to the executor, as a result of how qubit order is captured when boxing circuits and finding unique instructions. See the Qubit ordering convention

documentation for details.

[17]:

# Determine the canonical qubits order

meas_box = boxed_circuit.data[-1]

canonical_qubits = [

idx for idx, qubit in enumerate(boxed_circuit.qubits) if qubit in meas_box.qubits

]

# map canonical qubit to physical (isa) qubit

c_2_p = {c: p for c, p in enumerate(canonical_qubits)}

# map physical (isa) qubit to virtual qubit (index in original circuit)

p_2_v = {p: v for v, p in enumerate(layout)}

# compute map between virtual and canonical qubit indices.

c_2_v = {c: p_2_v[p] for c, p in c_2_p.items()}

assert len(c_2_v) == num_qubits

bases_canon = [

np.array([base_i[c_2_v[c]] for c in range(num_qubits)], dtype=np.uint8) for base_i in bases_virt

]

Workflow for lightcone shading, noise learning, and anti-noise injection¶

Note: For the implementation of SLC-PEC in this tutorial, we run SLC bound computations before the noise learning has been completed, so the to-be-mitigated circuit is run as close in time as possible to the learned noise model. In principle, this workflow can be further enhanced to execute simultaneously. Namely, a noise-learning job is run while, in parallel, noise bounds are estimated. For an arbitrary quantum circuit, the noise-bound computation can scale with a weakly exponential dependence. As such, it might be prudent to use parallelized execution when trying to maximize the workflow’s efficiency. To this end, we briefly demonstrate this by including cluster-based (128-thread) resources and showing how you can achieve a more refined set of bounds for a provided circuit when constrained to equal computation-time limits, compared to our laptop (8 threads). Furthermore, although not implemented in this workflow, you can parallelize the QPU executions for noise learning and noise-bound computations to achieve the most efficient workflow.

Predict to-be-learned noise-model Paulis¶

The generate_noise_model_paulis function goes through each boxed layer of the provided circuit and generates all relevant weight-one and weight-two Pauli terms, taking into account the circuit’s qubit connectivity, and selecting terms that are relevant to the active nodes and edges. These terms are then used to compute forward and backward noise bounds.

[18]:

noise_model_paulis = generate_noise_model_paulis(

unique_2q_instructions, backend.coupling_map, boxed_circuit

)

[19]:

noise_model_rates = {ref: None for ref in noise_model_paulis}

a. Compute and tighten forward bounds¶

The compute_forward_bounds function evaluates the commutation relations between the gates in each layer and the above-generated Pauli terms in terms of how forward-propagation errors affect the desired observable \(A\). For gates that commute with the Pauli terms, nothing is done. For Clifford gates, they are pushed toward the beginning of the circuit. For non-Clifford gates, we approximate their influence on the target observables to later be prioritized for noise cancelation (after all

bounds have been merged). This bound is achieved by first applying the L2 norm (namely, the square root of the sum of squares of the relevant Pauli-term coefficients). When there are too many qubit terms involved, we revert to a looser bound that uses the triangle inequality.

Laptop-level resources¶

[20]:

slc_atol = 1e-8

slc_eigval_max_qubits = 18

slc_evolution_max_terms = 1000

slc_num_processes = 8

slc_timeout = 60

[21]:

forward_bounds = compute_forward_bounds(

boxed_circuit,

noise_model_paulis,

isa_observable,

evolution_max_terms=slc_evolution_max_terms,

eigval_max_qubits=slc_eigval_max_qubits,

atol=slc_atol,

num_processes=slc_num_processes,

timeout=slc_timeout,

)

2025-11-10 11:20:04,344 INFO forward Evolving Pauli error terms forwards through the circuit.

2025-11-10 11:20:04,344 INFO forward Modelling errors as though they happen *after* each noise layer.

2025-11-10 11:20:04,345 INFO remove_measure Removing ANY Measure operations from the provided circuit!

2025-11-10 11:20:04,453 INFO circuit_iter Noisy box 'm39'

2025-11-10 11:20:05,254 INFO circuit_iter Noisy box 'm38'

2025-11-10 11:20:05,304 INFO circuit_iter Noisy box 'm37'

2025-11-10 11:20:05,382 INFO circuit_iter Noisy box 'm36'

2025-11-10 11:20:05,467 INFO circuit_iter Noisy box 'm35'

2025-11-10 11:20:05,580 INFO circuit_iter Noisy box 'm34'

2025-11-10 11:20:05,705 INFO circuit_iter Noisy box 'm33'

2025-11-10 11:20:05,857 INFO circuit_iter Noisy box 'm32'

2025-11-10 11:20:06,034 INFO circuit_iter Noisy box 'm31'

2025-11-10 11:20:06,221 INFO circuit_iter Noisy box 'm30'

2025-11-10 11:20:06,449 INFO circuit_iter Noisy box 'm29'

2025-11-10 11:20:06,724 INFO circuit_iter Noisy box 'm28'

2025-11-10 11:20:07,628 INFO circuit_iter Noisy box 'm27'

2025-11-10 11:20:09,110 INFO circuit_iter Noisy box 'm26'

2025-11-10 11:20:11,696 INFO circuit_iter Noisy box 'm25'

2025-11-10 11:20:16,100 INFO circuit_iter Noisy box 'm24'

2025-11-10 11:20:21,781 INFO circuit_iter Noisy box 'm23'

2025-11-10 11:20:30,244 INFO circuit_iter Noisy box 'm22'

2025-11-10 11:20:40,416 INFO circuit_iter Noisy box 'm21'

2025-11-10 11:20:53,437 INFO circuit_iter Noisy box 'm20'

2025-11-10 11:21:06,038 INFO circuit_iter Noisy box 'm19'

2025-11-10 11:21:06,038 WARNING commutator_bounds Bounds computation timed out.

2025-11-10 11:21:06,039 INFO circuit_iter Noisy box 'm18'

2025-11-10 11:21:06,039 INFO circuit_iter Noisy box 'm17'

2025-11-10 11:21:06,039 INFO circuit_iter Noisy box 'm16'

2025-11-10 11:21:06,040 INFO circuit_iter Noisy box 'm15'

2025-11-10 11:21:06,040 INFO circuit_iter Noisy box 'm14'

2025-11-10 11:21:06,040 INFO circuit_iter Noisy box 'm13'

2025-11-10 11:21:06,040 INFO circuit_iter Noisy box 'm12'

2025-11-10 11:21:06,041 INFO circuit_iter Noisy box 'm11'

2025-11-10 11:21:06,041 INFO circuit_iter Noisy box 'm10'

2025-11-10 11:21:06,041 INFO circuit_iter Noisy box 'm9'

2025-11-10 11:21:06,042 INFO circuit_iter Noisy box 'm8'

2025-11-10 11:21:06,042 INFO circuit_iter Noisy box 'm7'

2025-11-10 11:21:06,042 INFO circuit_iter Noisy box 'm6'

2025-11-10 11:21:06,042 INFO circuit_iter Noisy box 'm5'

2025-11-10 11:21:06,043 INFO circuit_iter Noisy box 'm4'

2025-11-10 11:21:06,043 INFO circuit_iter Noisy box 'm3'

2025-11-10 11:21:06,043 INFO circuit_iter Noisy box 'm2'

2025-11-10 11:21:06,043 INFO circuit_iter Noisy box 'm1'

2025-11-10 11:21:06,044 INFO circuit_iter Noisy box 'm0'

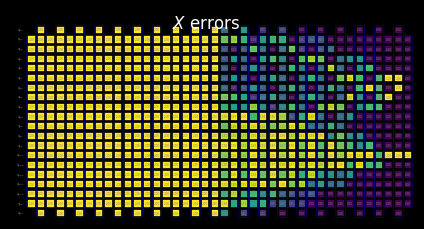

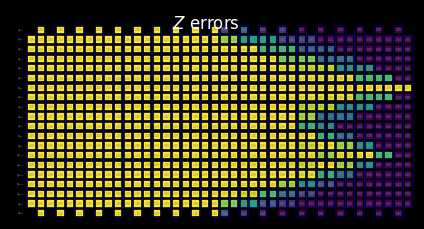

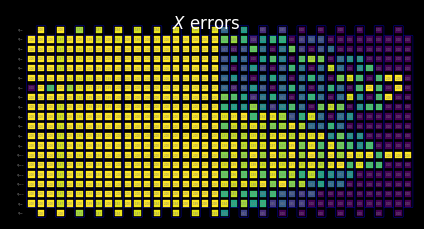

Visualize the SLC for manual inspection¶

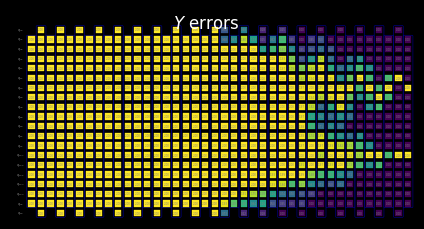

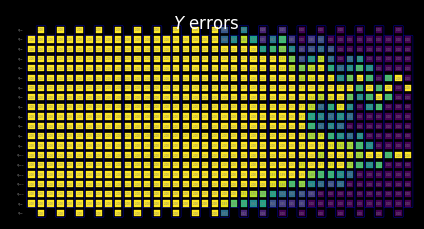

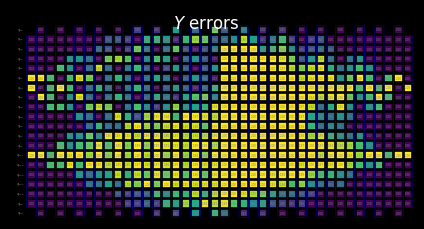

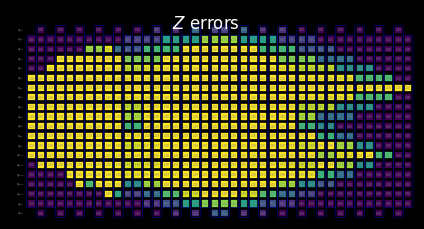

You can interpret the behavior of the shaded bounds by examining how the measurements and Pauli terms interact with the local errors. These patterns are characteristic of this kicked Ising Hamiltonian time-evolution problem and also appear in the paper Lightcone Shading for Classically Accelerated Quantum Error Mitigation, with several telltale features:

We can clearly distinguish the two cones arising from the two non-identity Paulis in the observable.

We can see that the X measurement on qubit 6 commutes with the X error in the rightmost layer.

We can see that the Z Pauli on qubit 13 commutes with the Z error in the rightmost layer.

When we reach the timeout specified above, the remaining layers to the left are filled entirely with trivial bounds of two.

[22]:

for p in "XYZ":

display(

draw_shaded_lightcone(

boxed_circuit,

forward_bounds,

noise_model_paulis,

pauli_filter=p,

scale=0.15,

fold=-1,

idle_wires=False,

wire_order=wire_order,

measure_arrows=False,

)

)

b. Compute and tighten forward bounds¶

We next tighten the bounds by using the tighten_with_speed_limit function, which tracks how the observable spreads backward through the circuit and uses that spread to place upper bounds on the effect of each noise operator, taking the smaller of the forward bound just computed, and the backward-propagation bound.

[23]:

forward_bounds_tighter = tighten_with_speed_limit(

forward_bounds, boxed_circuit, noise_model_paulis, isa_observable

)

2025-11-10 11:21:08,270 INFO speed_limit Tighting bounds using information propagation speed limits

2025-11-10 11:21:08,270 INFO speed_limit Modelling errors as though they happen *after* each noise layer.

2025-11-10 11:21:08,298 INFO remove_measure Removing ANY Measure operations from the provided circuit!

2025-11-10 11:21:08,310 INFO circuit_iter Noisy box 'm39'

2025-11-10 11:21:08,314 INFO circuit_iter Noisy box 'm38'

2025-11-10 11:21:08,317 INFO circuit_iter Noisy box 'm37'

2025-11-10 11:21:08,319 INFO circuit_iter Noisy box 'm36'

2025-11-10 11:21:08,323 INFO circuit_iter Noisy box 'm35'

2025-11-10 11:21:08,325 INFO circuit_iter Noisy box 'm34'

2025-11-10 11:21:08,328 INFO circuit_iter Noisy box 'm33'

2025-11-10 11:21:08,330 INFO circuit_iter Noisy box 'm32'

2025-11-10 11:21:08,334 INFO circuit_iter Noisy box 'm31'

2025-11-10 11:21:08,336 INFO circuit_iter Noisy box 'm30'

2025-11-10 11:21:08,338 INFO circuit_iter Noisy box 'm29'

2025-11-10 11:21:08,340 INFO circuit_iter Noisy box 'm28'

2025-11-10 11:21:08,344 INFO circuit_iter Noisy box 'm27'

2025-11-10 11:21:08,346 INFO circuit_iter Noisy box 'm26'

2025-11-10 11:21:08,349 INFO circuit_iter Noisy box 'm25'

2025-11-10 11:21:08,351 INFO circuit_iter Noisy box 'm24'

2025-11-10 11:21:08,355 INFO circuit_iter Noisy box 'm23'

2025-11-10 11:21:08,357 INFO circuit_iter Noisy box 'm22'

2025-11-10 11:21:08,360 INFO circuit_iter Noisy box 'm21'

2025-11-10 11:21:08,362 INFO circuit_iter Noisy box 'm20'

2025-11-10 11:21:08,367 INFO circuit_iter Noisy box 'm19'

2025-11-10 11:21:08,369 INFO circuit_iter Noisy box 'm18'

2025-11-10 11:21:08,372 INFO circuit_iter Noisy box 'm17'

2025-11-10 11:21:08,375 INFO circuit_iter Noisy box 'm16'

2025-11-10 11:21:08,378 INFO circuit_iter Noisy box 'm15'

2025-11-10 11:21:08,380 INFO circuit_iter Noisy box 'm14'

2025-11-10 11:21:08,383 INFO circuit_iter Noisy box 'm13'

2025-11-10 11:21:08,386 INFO circuit_iter Noisy box 'm12'

2025-11-10 11:21:08,389 INFO circuit_iter Noisy box 'm11'

2025-11-10 11:21:08,391 INFO circuit_iter Noisy box 'm10'

2025-11-10 11:21:08,394 INFO circuit_iter Noisy box 'm9'

2025-11-10 11:21:08,396 INFO circuit_iter Noisy box 'm8'

2025-11-10 11:21:08,399 INFO circuit_iter Noisy box 'm7'

2025-11-10 11:21:08,401 INFO circuit_iter Noisy box 'm6'

2025-11-10 11:21:08,404 INFO circuit_iter Noisy box 'm5'

2025-11-10 11:21:08,406 INFO circuit_iter Noisy box 'm4'

2025-11-10 11:21:08,410 INFO circuit_iter Noisy box 'm3'

2025-11-10 11:21:08,412 INFO circuit_iter Noisy box 'm2'

2025-11-10 11:21:08,415 INFO circuit_iter Noisy box 'm1'

2025-11-10 11:21:08,417 INFO circuit_iter Noisy box 'm0'

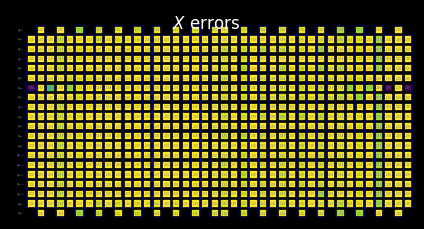

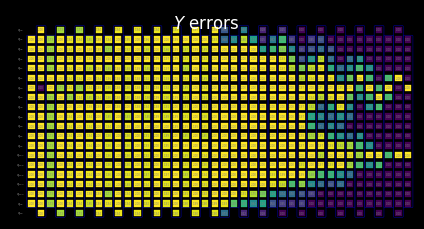

Visualize the SLC for manual inspection¶

We can further tighten the bounds by accounting for lightcone limitation. In principle, this gives us a smoother transition from the computed bounds to the trivial bounds set forth after the timeout was reached. Here, the effect is not as visible because the lightcones have already reached the edge of the circuit.

[24]:

for p in "XYZ":

display(

draw_shaded_lightcone(

boxed_circuit,

forward_bounds_tighter,

noise_model_paulis,

pauli_filter=p,

scale=0.15,

fold=-1,

idle_wires=False,

wire_order=wire_order,

measure_arrows=False,

)

)

c. Compute backward bounds¶

This part of noise prediction evaluates how an error at a particular layer can affect the input state \(\rho\). The compute_backward_bounds function first inverts the circuit, removes measurement gates, and then proceeds with a similar analysis as was done for the forward-bound computations.

[25]:

backward_bounds = compute_backward_bounds(

boxed_circuit,

noise_model_paulis,

evolution_max_terms=slc_evolution_max_terms,

num_processes=slc_num_processes,

timeout=slc_timeout,

)

2025-11-10 11:21:10,666 INFO backward Evolving Pauli error terms backwards through the circuit.

2025-11-10 11:21:10,666 INFO backward Modelling errors as though they happen *after* each noise layer.

2025-11-10 11:21:10,667 INFO remove_measure Removing ANY Measure operations from the provided circuit!

2025-11-10 11:21:10,774 INFO circuit_iter Noisy box 'm0'

2025-11-10 11:21:11,640 INFO circuit_iter Noisy box 'm1'

2025-11-10 11:21:11,681 INFO circuit_iter Noisy box 'm2'

2025-11-10 11:21:11,867 INFO circuit_iter Noisy box 'm3'

2025-11-10 11:21:12,078 INFO circuit_iter Noisy box 'm4'

2025-11-10 11:21:12,329 INFO circuit_iter Noisy box 'm5'

2025-11-10 11:21:12,637 INFO circuit_iter Noisy box 'm6'

2025-11-10 11:21:13,110 INFO circuit_iter Noisy box 'm7'

2025-11-10 11:21:13,705 INFO circuit_iter Noisy box 'm8'

2025-11-10 11:21:14,384 INFO circuit_iter Noisy box 'm9'

2025-11-10 11:21:15,213 INFO circuit_iter Noisy box 'm10'

2025-11-10 11:21:15,946 INFO circuit_iter Noisy box 'm11'

2025-11-10 11:21:16,754 INFO circuit_iter Noisy box 'm12'

2025-11-10 11:21:17,557 INFO circuit_iter Noisy box 'm13'

2025-11-10 11:21:18,447 INFO circuit_iter Noisy box 'm14'

2025-11-10 11:21:19,453 INFO circuit_iter Noisy box 'm15'

2025-11-10 11:21:20,472 INFO circuit_iter Noisy box 'm16'

2025-11-10 11:21:21,479 INFO circuit_iter Noisy box 'm17'

2025-11-10 11:21:22,660 INFO circuit_iter Noisy box 'm18'

2025-11-10 11:21:23,705 INFO circuit_iter Noisy box 'm19'

2025-11-10 11:21:24,849 INFO circuit_iter Noisy box 'm20'

2025-11-10 11:21:26,030 INFO circuit_iter Noisy box 'm21'

2025-11-10 11:21:27,111 INFO circuit_iter Noisy box 'm22'

2025-11-10 11:21:28,354 INFO circuit_iter Noisy box 'm23'

2025-11-10 11:21:29,554 INFO circuit_iter Noisy box 'm24'

2025-11-10 11:21:30,897 INFO circuit_iter Noisy box 'm25'

2025-11-10 11:21:32,113 INFO circuit_iter Noisy box 'm26'

2025-11-10 11:21:33,622 INFO circuit_iter Noisy box 'm27'

2025-11-10 11:21:34,962 INFO circuit_iter Noisy box 'm28'

2025-11-10 11:21:36,504 INFO circuit_iter Noisy box 'm29'

2025-11-10 11:21:38,021 INFO circuit_iter Noisy box 'm30'

2025-11-10 11:21:39,750 INFO circuit_iter Noisy box 'm31'

2025-11-10 11:21:41,237 INFO circuit_iter Noisy box 'm32'

2025-11-10 11:21:42,974 INFO circuit_iter Noisy box 'm33'

2025-11-10 11:21:44,527 INFO circuit_iter Noisy box 'm34'

2025-11-10 11:21:46,535 INFO circuit_iter Noisy box 'm35'

2025-11-10 11:21:48,152 INFO circuit_iter Noisy box 'm36'

2025-11-10 11:21:50,074 INFO circuit_iter Noisy box 'm37'

2025-11-10 11:21:51,814 INFO circuit_iter Noisy box 'm38'

2025-11-10 11:21:53,943 INFO circuit_iter Noisy box 'm39'

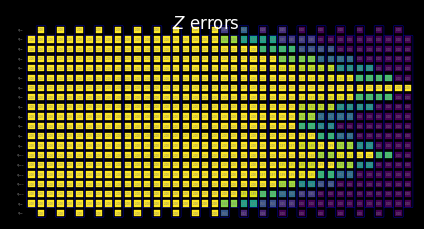

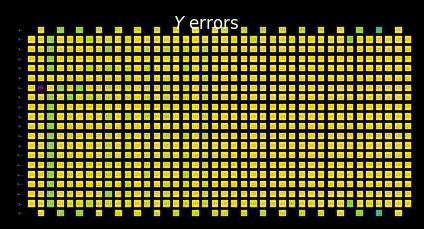

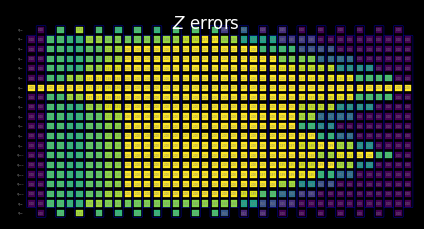

Visualize the SLC for manual inspection¶

From computing backward bounds, we can see how the initial state structure governs the early behavior of error propagation:

We can clearly see how the Z errors initially commute with the |0⟩ initial state.

Only on qubit 6, where we initialize the +1 eigenstate of the X basis, does a Z error fail to commute, while an X error does commute.

[26]:

for p in "XYZ":

display(

draw_shaded_lightcone(

boxed_circuit,

backward_bounds,

noise_model_paulis,

pauli_filter=p,

scale=0.15,

fold=-1,

idle_wires=False,

wire_order=wire_order,

measure_arrows=False,

)

)

Preview merged bounds without learned noise rates¶

The merged_bounds function determines the point in the circuit where switching from backward bounds to forward bounds minimizes the total estimated bias on the desired observable. This bias is computed as the sum of the backward-bound contributions for all noise locations before that point, plus the forward-bound contributions for all noise locations after it. Currently, this is done uniformly for all qubits.

Important Note: The point to switch from forward to backward bounds depends on the learned noise rates.

[27]:

merged_bounds = merge_bounds(

boxed_circuit,

forward_bounds_tighter,

backward_bounds,

noise_model_rates,

)

2025-11-10 11:21:58,304 WARNING merge Missing noise rates. Partitioning backward/forward commutator bounds by assuming uniform error rates.

2025-11-10 11:21:58,305 WARNING merge Optimal spacetime partitioning not implemented!Just partitioning list of noisy boxes.

2025-11-10 11:21:58,305 INFO merge Determined Box idx for partitioning to be 20.

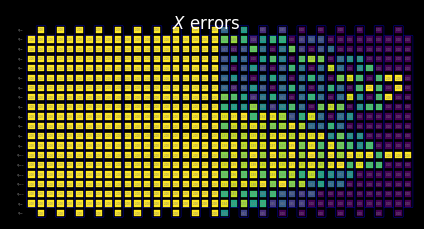

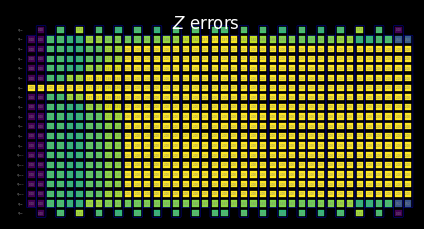

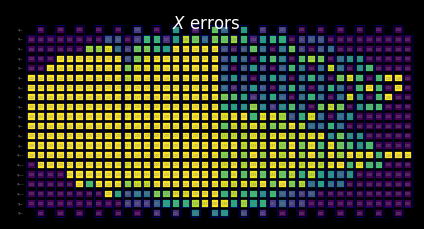

Visualize the SLC for manual inspection¶

After merging the backward and tightened forward bounds, the behavior of the combined SLCs becomes clear:

The function above tells us that a partition is chosen at which the switch from backward to tightened forward bounds takes place.

We can see below that the SLCs now contain partial backward and partial tightened forward bounds.

[28]:

for p in "XYZ":

display(

draw_shaded_lightcone(

boxed_circuit,

merged_bounds,

noise_model_paulis,

pauli_filter=p,

scale=0.15,

fold=-1,

idle_wires=False,

wire_order=wire_order,

measure_arrows=False,

)

)

Cluster-level resources¶

Here, we demonstrate how utilizing 128 threads on a cluster allows us to propagate through a more substantial portion of this larger circuit when limited to the same compute time as our laptop.

[29]:

with open("exp_data/merged_bounds_cluster.pickle", "rb") as file:

merged_bounds_cluster = pickle.load(file)

[30]:

for p in "XYZ":

display(

draw_shaded_lightcone(

boxed_circuit,

merged_bounds_cluster,

noise_model_paulis,

pauli_filter=p,

scale=0.15,

fold=-1,

idle_wires=False,

wire_order=wire_order,

measure_arrows=False,

)

)

Step 3. Execute¶

In this section we begin the part of the workflow that uses a real quantum device. For this learning-based error mitigation method, there are two steps to this:

Learn the noise by using

NoiseLeanerV3.Execute an error mitigation circuit with the new Samplomatic and Estimator framework.

With the bounded errors from our quantum circuit, we must learn the associated noise rates to prioritize our error budget, determine the sampling overhead, and execute on a QPU. Further, with this noise rate information, we can also highlight how, by utilizing the strong compute resources from our cluster, we reduce sampling overhead while maintaining the same residual bias.

a. Learn noise rates¶

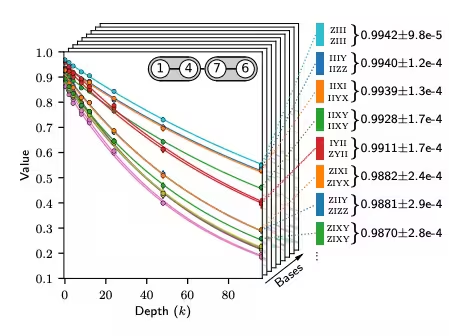

The noise learner allows characterization of the noise processes affecting the gates in one or more circuits of interest, based on the Pauli-Lindblad noise model described in the Probabilistic error cancellation with sparse Pauli-Lindblad models on noisy quantum processors paper. The run() method launches a noise-learning job for the provided unique 2-qubit layers, based on the options specified in the noise-learner configuration. In these options, you

can adjust the Pauli-twirling strategy, which helps ensure that the hardware is well described by the Pauli-Lindblad noise model.

Details of your noise model are at risk of drifting over time. As such, we set a parameter to ensure the learned noise model is recomputed for experiments older than four hours. This is a rough rule-of-thumb and should be considered carefully when applying it to your own work.

[ ]:

post_selection_enabled = True

load_cached_noise_results = True

[ ]:

noise_learner_options = NoiseLearnerV3Options(

num_randomizations=64,

shots_per_randomization=128,

layer_pair_depths=[1, 2, 4, 8, 12, 16, 24, 32, 40, 48],

post_selection={

"enable": post_selection_enabled,

"strategy": "edge",

"x_pulse_type": "rx",

},

)

noise_learner = NoiseLearnerV3(backend, noise_learner_options)

[ ]:

if load_cached_noise_results:

noise_learner_job = shared_service.job("d46ssf71gh7s7398k9a0")

else:

noise_learner_job = noise_learner.run(unique_2q_instructions)

[35]:

noise_learner_result = noise_learner_job.result()

[ ]:

if post_selection_enabled:

print("Minimum fraction of shots kept for noise learning experiments: ", end="")

print(

f"{min([min(d.values()) for d in [nlr.metadata['post_selection']['fraction_kept'] for nlr in noise_learner_result[:2]]]):.2f}"

)

Minimum fraction of shots kept for noise learning experiments: 0.58

[37]:

# Get a dict mapping InjectNoise.ref to QubitSparsePaulilist

refs_2_plm = noise_learner_result.to_dict(unique_2q_instructions, require_refs=False)

b.i. Update merged bounds with actual learned noise rates¶

Now that the specific noise model has been learned, we can apply the learned noise rates to the predicted noise bounds and obtain a final determination of which bounds have the most impact on minimizing the bias.

[38]:

merged_bounds = merge_bounds(

boxed_circuit,

forward_bounds_tighter,

backward_bounds,

refs_2_plm,

)

2025-11-10 11:22:03,755 WARNING merge Optimal spacetime partitioning not implemented!Just partitioning list of noisy boxes.

2025-11-10 11:22:03,756 INFO merge Determined Box idx for partitioning to be 20.

b.ii. Compute the local_scales for the hardware execution¶

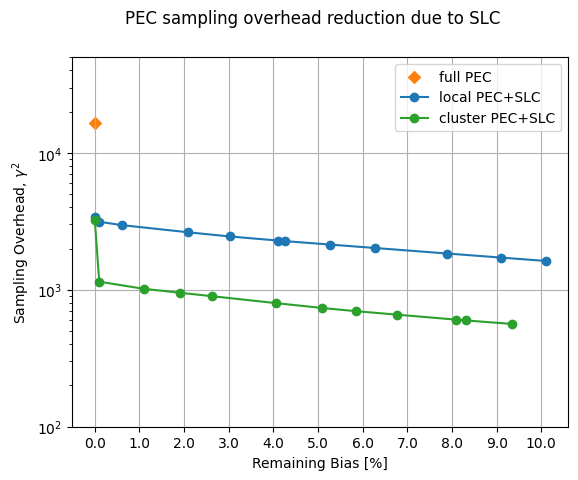

compute_local_scales looks at each possible noise error in the circuit and estimates how much that error could bias the final measurement, as well as how expensive it would be to correct it. It then ranks the errors by how worthwhile they are to mitigate and selects the subset that reduces bias as much as possible, while staying within the allowed sampling-cost budget (or achieving a desired accuracy). The result is a set of scaling factors indicating which errors will be actively mitigated

and which will be left unmitigated (local_scales), along with the predicted total sampling cost overhead (sampling_costs) and remaining bias (residual_bias_bound).

The ability to control the desired remaining bias is a critical feature of the SLC implementation of PEC. Whereas in the original implementation, the sampling overhead always targeted zero bias, we can tune the required sampling overhead with a trade-off in the expected remaining bias. This helps let the user stay within a fixed sampling budget, which can be particularly useful when initially prototyping a workflow.

[39]:

id_map = map_modifier_ref_to_ref(boxed_circuit)

[40]:

summed_rates = 0.0

for _box_id, noise_id in id_map.items():

learned_plm = refs_2_plm[noise_id]

summed_rates += np.sum(learned_plm.rates)

# print(f"{_box_id}:\tgamma = {np.exp(2 * summed_rates):1.6e}\tsampling cost = {np.exp(4 * summed_rates):1.6e}")

total_gamma = np.exp(2 * summed_rates)

print(f"Full PEC gamma={total_gamma}, sampling cost (gamma^2) = {total_gamma**2}")

Full PEC gamma=128.56055005423153, sampling cost (gamma^2) = 16527.81503024657

[41]:

biases = []

costs = []

for bias in [0.0, *np.arange(0.001, 0.102, 0.01).tolist()]:

_, cost_, bias_ = compute_local_scales(

boxed_circuit,

merged_bounds,

refs_2_plm,

sampling_cost_budget=np.inf,

bias_tolerance=bias,

)

biases.append(bias_)

costs.append(cost_)

[42]:

biases_cluster = []

costs_cluster = []

for bias in [0.0, *np.arange(0.001, 0.102, 0.01).tolist()]:

_, cost_, bias_ = compute_local_scales(

boxed_circuit,

merged_bounds_cluster,

refs_2_plm,

sampling_cost_budget=np.inf,

bias_tolerance=bias,

)

biases_cluster.append(bias_)

costs_cluster.append(cost_)

Benefits of clusters for reducing sampling overhead for a given classical compute time¶

[43]:

xticks = np.arange(0, 11)

fig, ax = plt.subplots()

ax.scatter([0], [total_gamma**2], marker="D", c="tab:orange", label="full PEC")

ax.plot(100 * np.array(biases), np.array(costs), "o-", c="tab:blue", label="local PEC+SLC")

ax.plot(

100 * np.array(biases_cluster),

np.array(costs_cluster),

"o-",

c="tab:green",

label="cluster PEC+SLC",

)

ax.set_yscale("log")

ax.set_ylim([100, 50000])

ax.set_xticks(xticks, [f"{x:.1f}" for x in xticks])

ax.set_xlabel("Remaining Bias [%]")

ax.set_ylabel(r"Sampling Overhead, $\gamma^2$")

ax.grid()

ax.legend()

fig.suptitle("PEC sampling overhead reduction due to SLC")

[43]:

Text(0.5, 0.98, 'PEC sampling overhead reduction due to SLC')

[44]:

chosen_bias_thres = 0.1

[45]:

local_scales, sampling_cost, residual_bias_bound = compute_local_scales(

boxed_circuit,

merged_bounds_cluster,

refs_2_plm,

sampling_cost_budget=np.inf,

bias_tolerance=chosen_bias_thres,

)

print(

f"PEC+SLC sampling cost (gamma^2) = {sampling_cost} w/ remaining bias = {100 * residual_bias_bound:.1f}%"

)

PEC+SLC sampling cost (gamma^2) = 563.1803982530477 w/ remaining bias = 9.3%

c. Execute the circuit of interest with antinoise¶

c.i. Prepare template circuit by using samplex¶

The samplex is an output of the build method of Samplomatic, which encodes all the information that is required to generate randomized parameters for template_circuit. These are then used to set up the QuantumProgram objects, which are in turn run on a QPU with the Executor primitive. Each QuantumProgram can contain several items, which you can think of as a pair of template and samplex.

See the Hello samplomatic tutorial for details.

[46]:

# Build template circuit and samplex for later use with the "Executor"

template_circuit, samplex = samplomatic.build(boxed_circuit)

[47]:

# Set up postselection if it's been enabled

if post_selection_enabled:

# Set up post selection PM (to add PS instructions)

post_selection_pm = PassManager(

[

AddSpectatorMeasures(backend.coupling_map),

AddPostSelectionMeasures(x_pulse_type="rx"),

]

)

final_template_circuit = post_selection_pm.run(template_circuit)

else:

final_template_circuit = template_circuit

2025-11-10 11:22:04,839 INFO base_tasks Pass: AddSpectatorMeasures - 3.41392 (ms)

2025-11-10 11:22:04,843 INFO base_tasks Pass: AddPostSelectionMeasures - 2.88510 (ms)

c.ii. Set up the QuantumProgram¶

[48]:

num_randomizations = 4096

shots_per_randomization = 64

chunk_size = 256

[49]:

# Set up QuantumProgram

program = QuantumProgram(shots=shots_per_randomization, noise_maps=refs_2_plm)

# no EM

# Collect up a dict of the other arguments that need to be bound to samplex_inputs

samplex_inputs = {f"noise_scales.{ref}": float(0) for ref in local_scales}

samplex_inputs |= {"basis_changes": {"basis0": bases_canon[0]}}

# Convert samplex_inputs into a dict to pass to QuantumProgram

samplex_arguments = samplex.inputs().bind(**samplex_inputs).make_broadcastable()

program.append(

circuit=final_template_circuit,

samplex=samplex,

samplex_arguments=samplex_arguments,

shape=(num_randomizations,),

chunk_size=chunk_size,

)

# plain PEC

# Collect a dict of the other arguments that need to be bound to samplex_inputs

samplex_inputs = {f"noise_scales.{ref}": float(-1) for ref in local_scales}

samplex_inputs |= {"basis_changes": {"basis0": bases_canon[0]}}

# Convert samplex_inputs into a dict to pass to QuantumProgram

samplex_arguments = samplex.inputs().bind(**samplex_inputs).make_broadcastable()

program.append(

circuit=final_template_circuit,

samplex=samplex,

samplex_arguments=samplex_arguments,

shape=(num_randomizations,),

chunk_size=chunk_size,

)

# PEC+SLC

# Collect a dict of the other arguments that need to be bound to samplex_inputs

samplex_inputs = {f"noise_scales.{ref}": float(-1) for ref in local_scales}

samplex_inputs |= {"basis_changes": {"basis0": bases_canon[0]}}

samplex_inputs |= {"local_scales": local_scales}

# Convert samplex_inputs into a dict to pass to QuantumProgram

samplex_arguments = samplex.inputs().bind(**samplex_inputs).make_broadcastable()

program.append(

circuit=final_template_circuit,

samplex=samplex,

samplex_arguments=samplex_arguments,

shape=(num_randomizations,),

chunk_size=chunk_size,

)

c.iii. Execute program with the Executor primitive¶

[50]:

executor = Executor(backend)

[51]:

load_cached_executor_results = True

[ ]:

if load_cached_executor_results:

job_exec = shared_service.job("d46t1q6qsa9s73cb28g0")

else:

job_exec = executor.run(program)

[53]:

results_exec = job_exec.result()

Step 4. Post-process¶

As we calculate the final expectation value of interest by using expectation_values, we will implement a few beneficial post-processing techniques to help ensure we obtain the highest-quality results possible. First, we apply our twirled readout mitigation, TREX, which accounts for any errors occurring during the readout process. Then, we fix errors due to

non-Markovian noise on our Heron backends by using a post-selection method. This method measures active and spectator qubits, then applies a slow rotation to each qubit, and then measures again. In instances where the two measurements do not confirm a flipped qubit as expected, these shots are discarded by applying a mask from the PostSelector function. Within the mask computation, a specific strategy can be set to filter based on single-qubit nodes or neighboring spectator edges, which

can influence both the number of shots filtered out and the quality of the results.

[54]:

measurement_noise_map = noise_learner_result[2].to_pauli_lindblad_map()

trex_scale_factors = trex_factors(measurement_noise_map, reverser_virt)

[55]:

post_selection_strategy = "node"

[ ]:

def post_process_conv(datum, steps=16, gamma=None, ps=False, trex=False):

meas = datum["meas"]

flips = datum["measurement_flips.meas"]

signs = datum.get("pauli_signs", None)

meas_basis_axis = None

avg_axis = 0

mask = None

if ps and post_selection_enabled:

# Post-select the results

post_selector = PostSelector.from_circuit(

circuit=final_template_circuit, coupling_map=backend.coupling_map

)

# Compute the ps mask for filtering results

mask = post_selector.compute_mask(datum, strategy=post_selection_strategy)

# Compute fraction of shots kept from post selection

total_num_shots = num_randomizations * shots_per_randomization

ps_ratio = np.sum(mask) * 100 / total_num_shots / len(bases_canon)

print(

f"With {post_selection_strategy}-based post selection ({ps_ratio:.1f}% of shots kept):"

)

results = []

for i in range(steps, num_randomizations + 1, steps):

# Compute mitigated expvals w/out postselectoion

res = executor_expectation_values(

meas[:i],

reverser_virt,

meas_basis_axis,

avg_axis=avg_axis,

measurement_flips=flips[:i],

pauli_signs=signs[:i] if signs is not None else None,

postselect_mask=mask[:i] if mask is not None else None,

rescale_factors=trex_scale_factors if trex else None,

gamma_factor=gamma,

)

results.append(res[0])

return results

[57]:

gamma_pec = gamma_from_noisy_boxes(refs_2_plm, id_map)

gamma_slc = gamma_from_noisy_boxes(refs_2_plm, id_map, local_scales)

[58]:

steps = 16

[59]:

results = {}

for label, result_idx, gamma, use_ps, use_trex in [

("PEC", 1, gamma_pec, True, True),

("PEC+SLC", 2, gamma_slc, True, True),

("Unmitigated", 0, None, False, False),

]:

res = post_process_conv(

results_exec[result_idx], steps=steps, gamma=gamma, ps=use_ps, trex=use_trex

)

results[label] = res

With node-based post selection (27.0% of shots kept):

With node-based post selection (26.8% of shots kept):

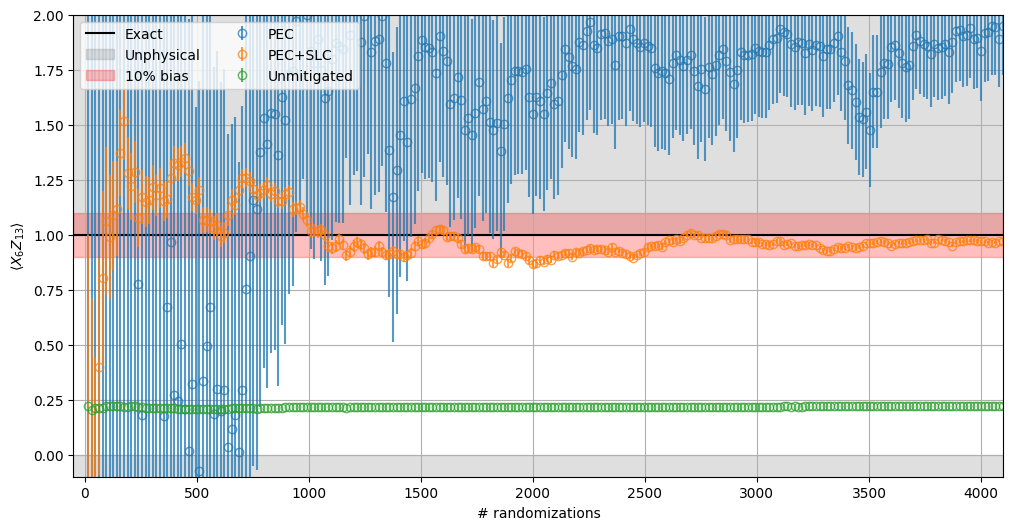

From examination of the experimental results, we can directly compare the behavior of different approaches: PEC, PEC combined with SLC, and the baseline of unmitigated results. Some specific details to highlight:

Unmitigated results remain outside the desired bias range and are unaffected by sampling overhead.

Given the high sampling cost computed above (~10k), PEC alone does not converge within the limits of randomization used.

PEC + SLC, in contrast, converges much more quickly.

The error bounds also decrease significantly faster for PEC + SLC than for plain PEC.

[60]:

fig, ax = plt.subplots(1, 1, figsize=(12, 6))

ax.axhline(1.0, color="black", label="Exact")

ax.fill_between([-50, 4100], -10, 0, color="grey", alpha=0.25, label="Unphysical")

ax.fill_between([-50, 4100], 1, 10, color="grey", alpha=0.25)

ax.fill_between([-50, 4100], 0.9, 1.1, color="red", alpha=0.25, label="10% bias")

for label, res in results.items():

ax.errorbar(

list(range(steps, num_randomizations + 1, steps)),

[r[0] for r in res],

yerr=[r[1] for r in res],

alpha=0.75,

marker="o",

linestyle="",

markerfacecolor="none",

label=label,

)

ax.set_ylabel(r"$\langle X_{6}Z_{13}\rangle$")

ax.set_xlabel("# randomizations")

ax.grid()

ax.legend(ncols=2)

ax.set_ylim([-0.1, 2.0])

ax.set_xlim([-50, 4100])

[60]:

(-50.0, 4100.0)