Utility-scale error mitigation with probabilistic error amplification

Usage estimate: 16 minutes on ibm_kingston (NOTE: This is an estimate only. Your runtime may vary.)

Background

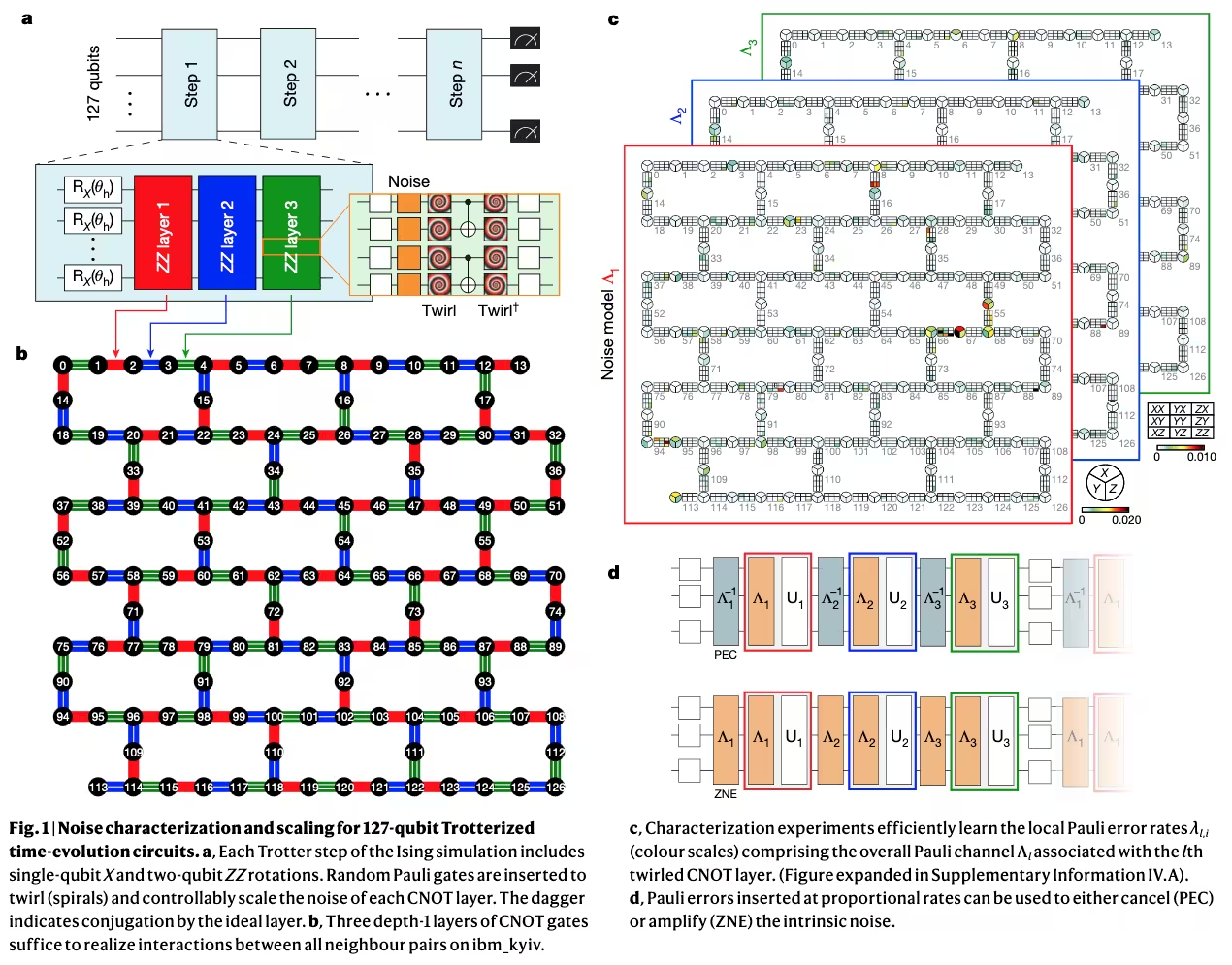

This tutorial demonstrates how to run a utility-scale error mitigation experiment with Qiskit Runtime, using an experimental version of zero-noise extrapolation (ZNE) with probabilistic error amplification (PEA).

Reference: Y. Kim et al. Evidence for the utility of quantum computing before fault tolerance. Nature 618.7965 (2023)


Zero-Noise Extrapolation (ZNE)

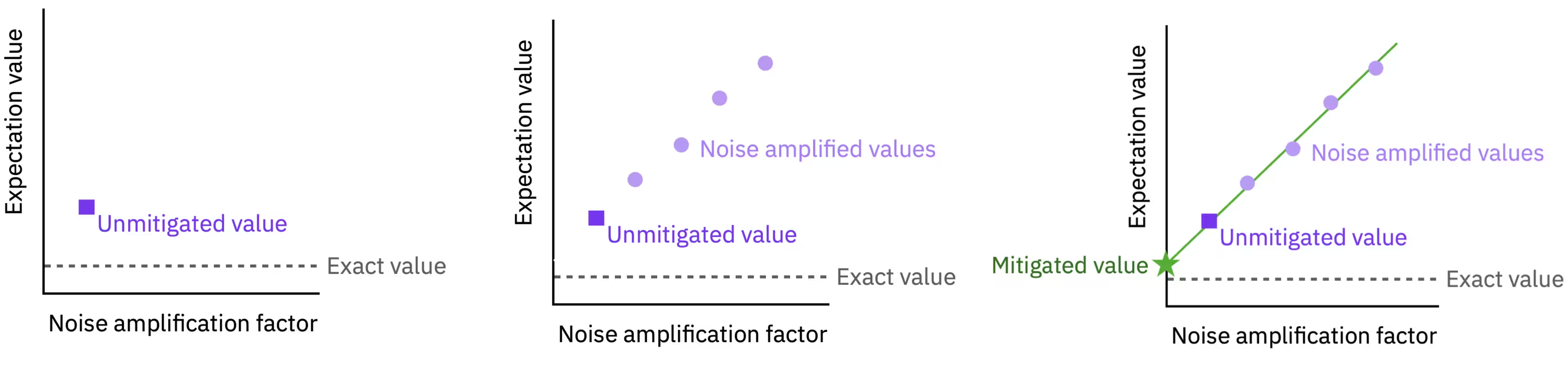

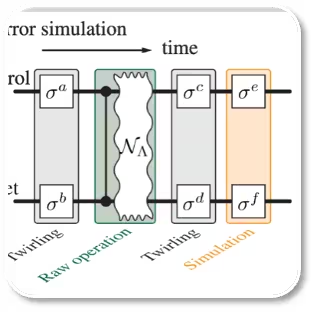

Zero-noise extrapolation (ZNE) is an error mitigation technique that mitigates the effects of unknown noise during circuit execution, provided that the noise can be scaled in a known way.

It assumes that expectation values scale with the noise according to a known function $\langle A(\lambda) \rangle$, where $\lambda$ parameterizes the noise strength and can be amplified.

We can implement ZNE with the following steps:

- Amplify circuit noise for several noise factors $\lambda_1, \lambda_2, \ldots$

- Run every noise-amplified circuit to measure $\langle A(\lambda_1) \rangle, \langle A(\lambda_2) \rangle, \ldots$

- Extrapolate back to the zero-noise limit $\langle A(0) \rangle$
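The three steps above can be sketched numerically. The following is a minimal illustration, not the Qiskit Runtime implementation: it assumes a hypothetical noise model in which the expectation value decays exponentially with the noise factor, and the names `ideal_value` and `decay_rate` are made up for the example. It compares a linear fit and an exponential fit, both evaluated at zero noise.

```python
import numpy as np

# Hypothetical noise model, for illustration only: the measured value decays
# exponentially with the noise factor, <A(lam)> = ideal_value * exp(-decay_rate * lam).
ideal_value = 0.8
decay_rate = 0.35
noise_factors = np.array([1.0, 1.3, 1.6])

# Steps 1 and 2: "measure" the expectation value at each amplified noise factor.
measured = ideal_value * np.exp(-decay_rate * noise_factors)

# Step 3a: linear extrapolation (least-squares straight line, evaluated at 0).
slope, intercept = np.polyfit(noise_factors, measured, deg=1)
linear_estimate = intercept

# Step 3b: exponential extrapolation (straight-line fit to the logarithm).
log_slope, log_intercept = np.polyfit(noise_factors, np.log(measured), deg=1)
exponential_estimate = np.exp(log_intercept)

print(f"unmitigated (noise factor 1): {measured[0]:.4f}")
print(f"linear extrapolation:         {linear_estimate:.4f}")
print(f"exponential extrapolation:    {exponential_estimate:.4f}")
```

Because the synthetic data is exactly exponential, the exponential fit recovers `ideal_value`, while the linear fit only partially corrects the bias. On real data neither model is guaranteed to hold, which is why the choice of extrapolator matters.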

Amplify noise for ZNE

The main challenge in successfully implementing ZNE is to have an accurate model for noise in the expectation value and to amplify the noise in a known way.

There are three common ways error amplification is implemented for ZNE.

| Pulse stretching | Gate folding | Probabilistic error amplification |
|---|---|---|
| Scale pulse duration via calibration | Repeat gates in identity cycles | Add noise via sampling Pauli channels |
| Kandala et al. Nature (2019) | Schultz et al. PRA (2022) | Li & Benjamin PRX (2017) |

For utility-scale experiments, probabilistic error amplification (PEA) is the most attractive option:

- Pulse stretching assumes gate noise is proportional to duration, which is typically not true. Calibration is also costly.

- Gate folding requires large stretch factors that greatly limit the depth of circuits that can be run.

- PEA can be applied to any circuit that can be run at the native noise level (noise factor 1), but it requires learning the noise model.

Learn the noise model for PEA

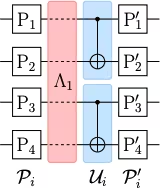

PEA assumes the same layer-based noise model as probabilistic error cancellation (PEC); however, it avoids the sampling overhead that scales exponentially with the circuit noise.

| Step 1 | Step 2 | Step 3 |
|---|---|---|
| Pauli twirl layers of two-qubit gates | Repeat identity pairs of layers and learn the noise | Derive a fidelity (error) for each noise channel |

Reference: E. van den Berg, Z. Minev, A. Kandala, and K. Temme, Probabilistic error cancellation with sparse Pauli-Lindblad models on noisy quantum processors arXiv:2201.09866
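To make the layer noise model more concrete, here is a minimal sketch of how Pauli fidelities follow from a sparse Pauli-Lindblad model as described in the reference above: the fidelity of a Pauli operator is the exponential of minus twice the sum of the rates of all generators that anticommute with it. The two-qubit `model` dictionary and the helper names are hypothetical and chosen only for illustration. Because the generators commute with each other, scaling every rate by a noise factor G raises each fidelity to the power G, which is the property PEA relies on when it amplifies noise by sampling extra Pauli errors.

```python
import math


def anticommutes(pauli_a: str, pauli_b: str) -> bool:
    """Return True if two Pauli strings of equal length anticommute."""
    mismatches = sum(
        1 for a, b in zip(pauli_a, pauli_b) if a != "I" and b != "I" and a != b
    )
    return mismatches % 2 == 1


def pauli_fidelity(pauli: str, model: dict[str, float], noise_factor: float = 1.0) -> float:
    """Fidelity of `pauli` under a Pauli-Lindblad channel with scaled rates."""
    total_rate = sum(
        rate for generator, rate in model.items() if anticommutes(pauli, generator)
    )
    return math.exp(-2.0 * noise_factor * total_rate)


# Hypothetical two-qubit model: generator label -> rate (not learned data).
model = {"XI": 0.002, "IZ": 0.001, "ZZ": 0.004, "XY": 0.0005}

for factor in (1.0, 1.3, 1.6):
    print(factor, round(pauli_fidelity("ZI", model, factor), 6))
```

In this toy model only `XI` and `XY` anticommute with `ZI`, so only their rates contribute to its fidelity.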

Requirements

Before starting this tutorial, ensure that you have the following installed:

- Qiskit SDK 1.0 or later with visualization support (`pip install 'qiskit[visualization]'`)

- Qiskit Runtime 0.22 or later (`pip install qiskit-ibm-runtime`)

Setup

from __future__ import annotations

from collections.abc import Sequence
from collections import defaultdict

import numpy as np
import rustworkx
import matplotlib.pyplot as plt

from qiskit.circuit import QuantumCircuit, Parameter
from qiskit.circuit.library import CXGate, CZGate, ECRGate
from qiskit.providers import Backend
from qiskit.visualization import plot_error_map
from qiskit.transpiler.preset_passmanagers import generate_preset_pass_manager
from qiskit.quantum_info import SparsePauliOp
from qiskit.primitives import PubResult

from qiskit_ibm_runtime import QiskitRuntimeService
from qiskit_ibm_runtime import EstimatorV2 as Estimator

Step 1: Map classical inputs to a quantum problem

Create a parameterized Ising model circuit

First, choose a backend to run on. The code below requests the least busy backend with at least 127 qubits; the run shown in this tutorial used ibm_kingston, which has 156 qubits. You can modify this to any backend available to you.

service = QiskitRuntimeService()
backend = service.least_busy(
    operational=True, simulator=False, min_num_qubits=127
)
backend

Output:

<IBMBackend('ibm_kingston')>

Helper functions for circuit construction

Next, create some helper functions to construct the circuits for the Trotterized time evolution of a two-dimensional transverse-field Ising model that adheres to the topology of the backend.

"""Trotter circuit generation"""

def remove_qubit_couplings(
    couplings: Sequence[tuple[int, int]], qubits: Sequence[int] | None = None
) -> list[tuple[int, int]]:
    """Remove qubits from a coupling list.

    Args:
        couplings: A sequence of qubit couplings.
        qubits: Optional, the qubits to remove.

    Returns:
        The input couplings with the specified qubits removed.
    """
    if qubits is None:
        return couplings
    qubits = set(qubits)
    return [edge for edge in couplings if not qubits.intersection(edge)]

def coupling_qubits(
    *couplings: Sequence[tuple[int, int]],
    allowed_qubits: Sequence[int] | None = None,
) -> list[int]:
    """Return a sorted list of all qubits involved in 1 or more couplings lists.

    Args:
        couplings: 1 or more coupling lists.
        allowed_qubits: Optional, the allowed qubits to include. If None all
            qubits are allowed.

    Returns:
        The intersection of all qubits in the couplings and the allowed qubits.
    """
    qubits = set()
    for edges in couplings:
        for edge in edges:
            qubits.update(edge)
    if allowed_qubits is not None:
        qubits = qubits.intersection(allowed_qubits)
    # Sort so the result matches the documented return value
    return sorted(qubits)

def construct_layer_couplings(
    backend: Backend,
) -> list[list[tuple[int, int]]]:
    """Separate a coupling map into disjoint 2-qubit gate layers.

    Args:
        backend: A backend to construct layer couplings for.

    Returns:
        A list of disjoint layers of directed couplings for the input coupling map.
    """
    coupling_graph = backend.coupling_map.graph.to_undirected(
        multigraph=False
    )
    edge_coloring = rustworkx.graph_bipartite_edge_color(coupling_graph)

    layers = defaultdict(list)
    for edge_idx, color in edge_coloring.items():
        layers[color].append(
            coupling_graph.get_edge_endpoints_by_index(edge_idx)
        )
    layers = [sorted(layers[i]) for i in sorted(layers.keys())]
    return layers

def entangling_layer(
    gate_2q: str,
    couplings: Sequence[tuple[int, int]],
    qubits: Sequence[int] | None = None,
) -> QuantumCircuit:
    """Generate an entangling layer for the specified couplings.

    This corresponds to a Trotter layer for a ZZ Ising term with angle Pi/2.

    Args:
        gate_2q: The 2-qubit basis gate for the layer, should be "cx", "cz", or "ecr".
        couplings: A sequence of qubit couplings to add 2-qubit gates to.
        qubits: Optional, the physical qubits for the layer. Any couplings involving
            qubits not in this list will be removed. If None the range up to the largest
            qubit in the couplings will be used.

    Returns:
        The QuantumCircuit for the entangling layer.
    """
    # Get qubits and convert to a set
    if qubits is None:
        qubits = range(1 + max(coupling_qubits(couplings)))
    qubits = set(qubits)

    # Mapping of physical qubit to virtual qubit
    qubit_mapping = {q: i for i, q in enumerate(qubits)}

    # Convert couplings to indices for virtual qubits
    indices = [
        [qubit_mapping[i] for i in edge]
        for edge in couplings
        if qubits.issuperset(edge)
    ]

    # Layer circuit on virtual qubits
    circuit = QuantumCircuit(len(qubits))

    # Get 2-qubit basis gate and pre and post rotation circuits
    gate2q = None
    pre = QuantumCircuit(2)
    post = QuantumCircuit(2)

    if gate_2q == "cx":
        gate2q = CXGate()
        # Pre-rotation
        pre.sdg(0)
        pre.z(1)
        pre.sx(1)
        pre.s(1)
        # Post-rotation
        post.sdg(1)
        post.sxdg(1)
        post.s(1)
    elif gate_2q == "ecr":
        gate2q = ECRGate()
        # Pre-rotation
        pre.z(0)
        pre.s(1)
        pre.sx(1)
        pre.s(1)
        # Post-rotation
        post.x(0)
        post.sdg(1)
        post.sxdg(1)
        post.s(1)
    elif gate_2q == "cz":
        gate2q = CZGate()
        # Identity pre-rotation
        # Post-rotation
        post.sdg([0, 1])
    else:
        raise ValueError(
            f"Invalid 2-qubit basis gate {gate_2q}, should be 'cx', 'cz', or 'ecr'"
        )

    # Add 1Q pre-rotations
    for inds in indices:
        circuit.compose(pre, qubits=inds, inplace=True)

    # Use barriers around 2-qubit basis gate to specify a layer for PEA noise learning
    circuit.barrier()
    for inds in indices:
        circuit.append(gate2q, (inds[0], inds[1]))
    circuit.barrier()

    # Add 1Q post-rotations after barrier
    for inds in indices:
        circuit.compose(post, qubits=inds, inplace=True)

    # Add physical qubits as metadata
    circuit.metadata["physical_qubits"] = tuple(qubits)

    return circuit

def trotter_circuit(
    theta: Parameter | float,
    layer_couplings: Sequence[Sequence[tuple[int, int]]],
    num_steps: int,
    gate_2q: str | None = "cx",
    backend: Backend | None = None,
    qubits: Sequence[int] | None = None,
) -> QuantumCircuit:
    """Generate a Trotter circuit for the 2D Ising model.

    Args:
        theta: The angle parameter for X.
        layer_couplings: A list of couplings for each entangling layer.
        num_steps: The number of Trotter steps.
        gate_2q: The 2-qubit basis gate to use in entangling layers.
            Can be "cx", "cz", "ecr", or None if a backend is provided.
        backend: A backend to get the 2-qubit basis gate from. If provided,
            it overrides the gate_2q argument.
        qubits: Optional, the allowed physical qubits to truncate the
            couplings to. If None the range up to the largest
            qubit in the couplings will be used.

    Returns:
        The Trotter circuit.
    """
    if backend is not None:
        try:
            basis_gates = backend.configuration().basis_gates
        except AttributeError:
            basis_gates = backend.basis_gates
        for gate in ["cx", "cz", "ecr"]:
            if gate in basis_gates:
                gate_2q = gate
                break

    # If no qubits, get the largest qubit from all layers and
    # specify the range so the same one is used for all layers.
    if qubits is None:
        # Unpack the layers: coupling_qubits expects one coupling list per argument
        qubits = range(1 + max(coupling_qubits(*layer_couplings)))

    # Generate the entangling layers
    layers = [
        entangling_layer(gate_2q, couplings, qubits=qubits)
        for couplings in layer_couplings
    ]

    # Construct the circuit for a single Trotter step
    num_qubits = len(qubits)
    trotter_step = QuantumCircuit(num_qubits)
    trotter_step.rx(theta, range(num_qubits))
    for layer in layers:
        trotter_step.compose(layer, range(num_qubits), inplace=True)

    # Repeat the single step for the specified number of Trotter steps
    circuit = QuantumCircuit(num_qubits)
    for _ in range(num_steps):
        circuit.compose(trotter_step, inplace=True)

    circuit.metadata["physical_qubits"] = tuple(qubits)
    return circuit

Define entangling layer couplings

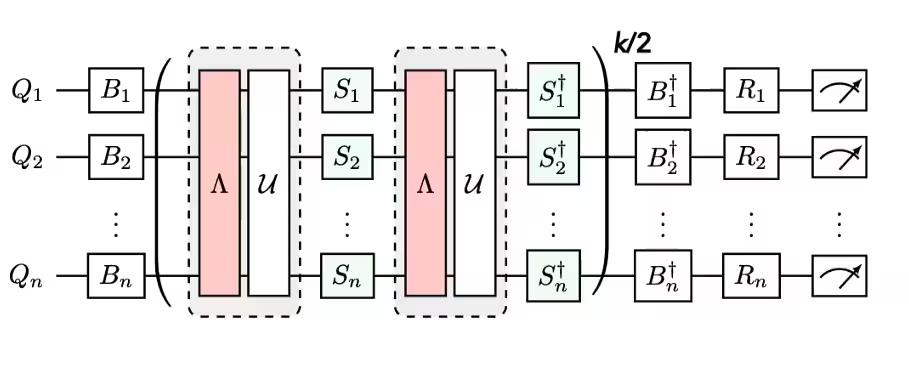

To implement the Trotterized Ising simulation, define three layers of two-qubit gate couplings for the device, to be repeated at each of the Trotter steps. These define the three twirled layers you need to learn the noise for to implement mitigation.

layer_couplings = construct_layer_couplings(backend)
for i, layer in enumerate(layer_couplings):
    print(f"Layer {i}:\n{layer}\n")

Output:

Layer 0:

[(2, 3), (4, 5), (6, 7), (8, 9), (10, 11), (12, 13), (14, 15), (16, 23), (18, 31), (19, 35), (20, 21), (25, 37), (26, 27), (28, 29), (33, 39), (36, 41), (38, 49), (42, 43), (45, 46), (47, 57), (51, 52), (53, 54), (56, 63), (58, 71), (59, 75), (61, 62), (64, 65), (66, 67), (68, 69), (72, 73), (76, 81), (79, 93), (82, 83), (84, 85), (86, 87), (88, 89), (91, 98), (94, 95), (97, 107), (99, 115), (100, 101), (102, 103), (105, 117), (108, 109), (110, 111), (113, 114), (116, 121), (118, 129), (123, 136), (124, 125), (126, 127), (130, 131), (132, 133), (135, 139), (138, 151), (142, 143), (144, 145), (146, 147), (152, 153), (154, 155)]

Layer 1:

[(0, 1), (3, 16), (5, 6), (7, 8), (11, 18), (13, 14), (17, 27), (21, 22), (23, 24), (25, 26), (29, 38), (30, 31), (32, 33), (34, 35), (39, 53), (41, 42), (43, 56), (44, 45), (47, 48), (49, 50), (51, 58), (54, 55), (57, 67), (60, 61), (62, 63), (65, 66), (69, 78), (70, 71), (73, 79), (74, 75), (77, 85), (80, 81), (83, 84), (87, 97), (89, 90), (91, 92), (93, 94), (96, 103), (101, 116), (104, 105), (106, 107), (109, 118), (111, 112), (113, 119), (114, 115), (117, 125), (121, 122), (123, 124), (127, 137), (128, 129), (131, 138), (133, 134), (136, 143), (139, 155), (140, 141), (145, 146), (147, 148), (149, 150), (151, 152)]

Layer 2:

[(1, 2), (3, 4), (7, 17), (9, 10), (11, 12), (15, 19), (21, 36), (22, 23), (24, 25), (27, 28), (29, 30), (31, 32), (33, 34), (37, 45), (40, 41), (43, 44), (46, 47), (48, 49), (50, 51), (52, 53), (55, 59), (61, 76), (63, 64), (65, 77), (67, 68), (69, 70), (71, 72), (73, 74), (78, 89), (81, 82), (83, 96), (85, 86), (87, 88), (90, 91), (92, 93), (95, 99), (98, 111), (101, 102), (103, 104), (105, 106), (107, 108), (109, 110), (112, 113), (119, 133), (120, 121), (122, 123), (125, 126), (127, 128), (129, 130), (131, 132), (134, 135), (137, 147), (141, 142), (143, 144), (148, 149), (150, 151), (153, 154)]

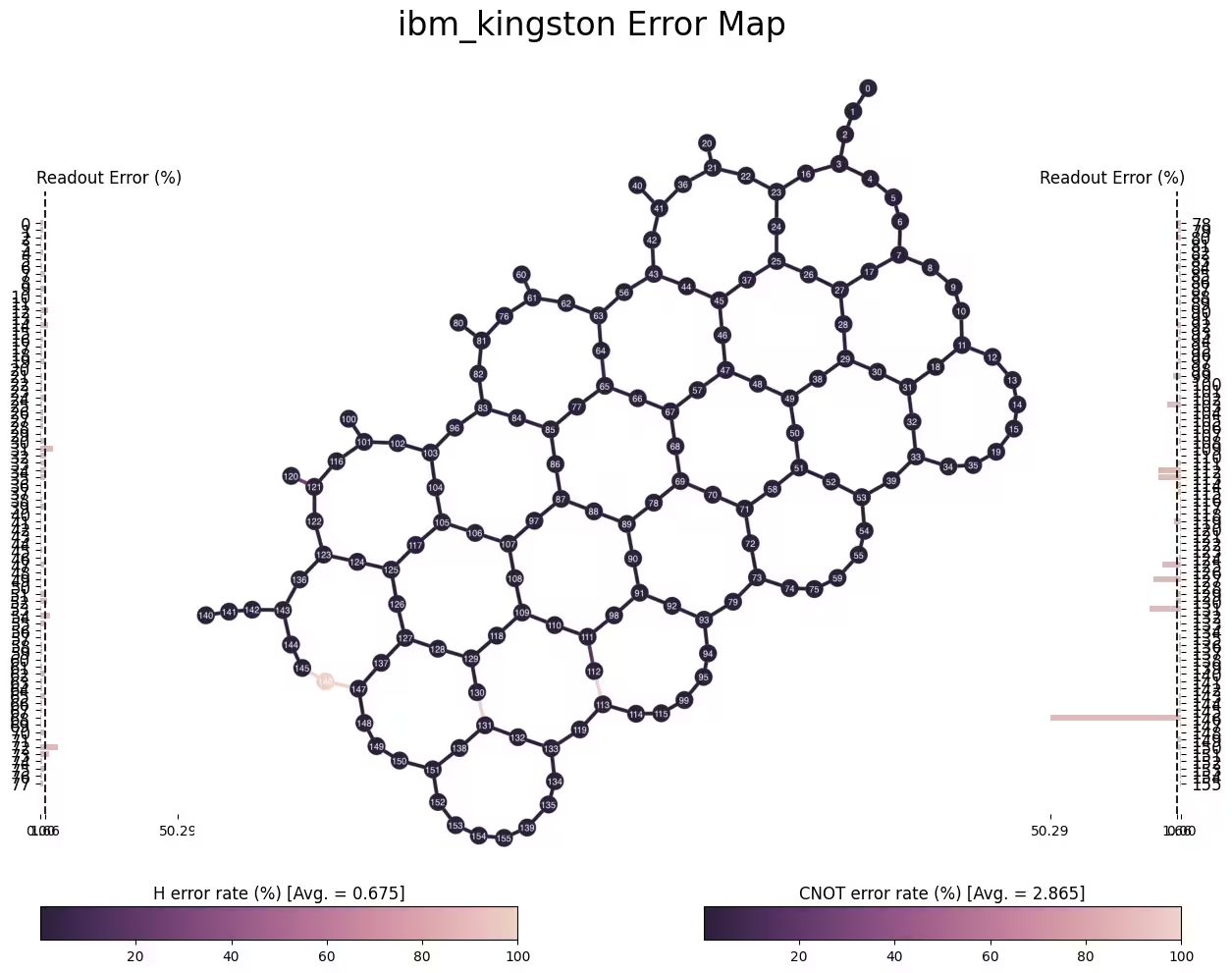
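A useful sanity check on the layers is that each one is disjoint, meaning that no qubit takes part in more than one two-qubit gate within the same layer. The following minimal sketch uses a hypothetical helper, `is_disjoint_layer`, and only the first few couplings of each printed layer; in practice you would pass the full `layer_couplings` list.

```python
from collections import Counter


def is_disjoint_layer(layer):
    """Return True if no qubit appears in more than one coupling of the layer."""
    counts = Counter(qubit for edge in layer for qubit in edge)
    return all(count == 1 for count in counts.values())


# First few couplings of each layer printed above (truncated for brevity).
example_layers = [
    [(2, 3), (4, 5), (6, 7), (8, 9)],
    [(0, 1), (3, 16), (5, 6), (7, 8)],
    [(1, 2), (3, 4), (7, 17), (9, 10)],
]

for index, layer in enumerate(example_layers):
    print(f"Layer {index} disjoint: {is_disjoint_layer(layer)}")

# Merging two layers makes qubits repeat, so the merged list is not a valid layer.
print(is_disjoint_layer(example_layers[0] + example_layers[1]))
```

Disjoint layers matter here because all gates in a layer run in parallel, and the noise of each layer is learned as a single unit.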

Remove bad qubits

Look at the coupling map for the backend and see if any qubits connect to couplings with high error. Remove these "bad" qubits from your experiment.

# Plot gate error map
# NOTE: These can change over time, so your results may look different
plot_error_map(backend)

bad_qubits = {
    56,
    63,
    67,
}  # qubits removed based on high coupling error (1.00)
good_qubits = list(set(range(backend.num_qubits)).difference(bad_qubits))
print("Physical qubits:\n", good_qubits)

Output:

Physical qubits:

[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 57, 58, 59, 60, 61, 62, 64, 65, 66, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155]
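In this tutorial the bad qubits were picked by inspecting the error map by eye. As a rough sketch of how that selection could be automated, the snippet below flags every qubit that touches a coupling whose error meets a threshold. The function name, the threshold, and the error values are all hypothetical; the errors are not read from any backend.

```python
def qubits_on_bad_couplings(coupling_errors, threshold):
    """Return the sorted qubits touching any coupling whose error meets the threshold."""
    bad = set()
    for (qubit_a, qubit_b), error in coupling_errors.items():
        if error >= threshold:
            bad.update((qubit_a, qubit_b))
    return sorted(bad)


# Hypothetical two-qubit gate errors keyed by coupling (illustrative values,
# not read from any backend).
example_errors = {
    (0, 1): 0.004,
    (1, 2): 1.0,
    (2, 3): 0.006,
    (3, 4): 0.005,
}

print(qubits_on_bad_couplings(example_errors, threshold=0.5))
```

Flagging both endpoints is a conservative choice. Removing a single endpoint is already enough to drop the faulty coupling from the experiment, so a manual selection can keep more qubits.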

Main Trotter circuit generation

num_steps = 6
theta = Parameter("theta")
circuit = trotter_circuit(
    theta, layer_couplings, num_steps, qubits=good_qubits, backend=backend
)

Create a list of parameter values to be assigned later

num_params = 12

# 12 parameter values for Rx between [0, pi/2].
# Reshape to outer product broadcast with observables
parameter_values = np.linspace(0, np.pi / 2, num_params).reshape(
    (num_params, 1)
)
num_params = parameter_values.size

Step 2: Optimize problem for quantum hardware execution

ISA circuit

Before running the circuit on hardware, optimize it for hardware execution. This process involves a few steps:

- Pick a qubit layout that maps the virtual qubits of your circuit to physical qubits on the hardware.

- Insert swap gates as needed to route interactions between qubits that are not connected.

- Translate the gates in your circuit to Instruction Set Architecture (ISA) instructions that can be executed directly on the hardware.

- Perform circuit optimizations to minimize the circuit depth and gate count.

Although the transpiler built into Qiskit can perform all of these steps, this notebook demonstrates building the utility-scale Trotter circuit from the ground up: you select the good physical qubits and define entangling layers on connected qubit pairs from those selected qubits. Nonetheless, you still need to translate non-ISA gates in the circuit and take advantage of any circuit optimization offered by the transpiler.

Transpile your circuit for the chosen backend by creating a pass manager and then running the pass manager on the circuit. Also, fix the initial layout of the circuit to the already selected good_qubits. An easy way to create a pass manager is to use the generate_preset_pass_manager function. See Transpile with pass managers for a more detailed explanation of transpiling with pass managers.

pm = generate_preset_pass_manager(
    backend=backend,
    initial_layout=good_qubits,
    layout_method="trivial",
    optimization_level=1,
)
isa_circuit = pm.run(circuit)

ISA observables

Next, create all weight-1 observables for each virtual qubit by padding the necessary number of terms.

observables = []
num_qubits = len(good_qubits)
for q in range(num_qubits):
    observables.append(
        SparsePauliOp("I" * (num_qubits - q - 1) + "Z" + "I" * q)
    )

The transpilation process has mapped the virtual qubits of your circuit to physical qubits on the hardware. The information about the qubit layout is stored in the layout attribute of the transpiled circuit. Your observable is also defined in terms of the virtual qubits, so you need to apply this layout to the observable. This is done using the apply_layout method of SparsePauliOp.

Notice that each observable is wrapped in a list in the following code block. This is done so that the observables broadcast against the parameter values, and each qubit observable is measured for each theta value. The broadcasting rules for primitives are described in the Qiskit documentation.

isa_observables = [
    [obs.apply_layout(layout=isa_circuit.layout)] for obs in observables
]

Step 3: Execute using Qiskit primitives

pub = (isa_circuit, isa_observables, parameter_values)

Configure Estimator options

Next, configure the Estimator options needed to run the mitigation experiment. These include options for the noise learning of the entangling layers and for ZNE extrapolation.

We use the following configuration:

# Experiment options
num_randomizations = 700
num_randomizations_learning = 40
max_batch_circuits = 3 * num_params
shots_per_randomization = 64
learning_pair_depths = [0, 1, 2, 4, 6, 12, 24]
noise_factors = [1, 1.3, 1.6]
extrapolated_noise_factors = np.linspace(0, max(noise_factors), 20)

# Base option formatting
options = {
    # Builtin resilience settings for ZNE
    "resilience": {
        "measure_mitigation": True,
        "zne_mitigation": True,
        # TREX noise learning configuration
        "measure_noise_learning": {
            "num_randomizations": num_randomizations_learning,
            "shots_per_randomization": 1024,
        },
        # PEA noise model configuration
        "layer_noise_learning": {
            "max_layers_to_learn": 3,
            "layer_pair_depths": learning_pair_depths,
            "shots_per_randomization": shots_per_randomization,
            "num_randomizations": num_randomizations_learning,
        },
        "zne": {
            "amplifier": "pea",
            "noise_factors": noise_factors,
            "extrapolator": ("exponential", "linear"),
            "extrapolated_noise_factors": extrapolated_noise_factors.tolist(),
        },
    },
    # Randomization configuration
    "twirling": {
        "num_randomizations": num_randomizations,
        "shots_per_randomization": shots_per_randomization,
        "strategy": "active-circuit",
    },
    # Optional Dynamical Decoupling (DD)
    "dynamical_decoupling": {"enable": True, "sequence_type": "XY4"},
}

Explanation of ZNE options

The following gives details on the additional options in the experimental branch. Note that these options and names are not finalized, and everything here is subject to change before an official release.

- `amplifier`: The method to use when amplifying noise to the intended noise factors. Allowed values are `"gate_folding"`, which amplifies by repeating two-qubit basis gates, and `"pea"`, which amplifies by probabilistic sampling after learning the Pauli-twirled noise model for layers of twirled two-qubit basis gates. There are also `"gate_folding_front"` and `"gate_folding_back"` options, which are explained in the API documentation.

- `extrapolated_noise_factors`: Specify one or more noise factor values at which to evaluate the extrapolated models. If a sequence of values is given, the returned results are array-valued, with the extrapolation model evaluated at each specified noise factor. A value of 0 corresponds to zero-noise extrapolation.

Run the experiment

estimator = Estimator(mode=backend, options=options)
job = estimator.run([pub])
print(f"Job ID {job.job_id()}")

Output:

Job ID d0mcsvik4jhc73afljrg

Step 4: Post-process and return result in desired classical format

Once the experiment is finished, you can view your results. Fetch the raw and mitigated expectation values and compare them with exact results. Then, plot the expectation values, both mitigated (extrapolated) and raw, averaged over all qubits for each parameter. Finally, plot expectation values for your choice of individual qubits.

primitive_result = job.result()

General result shapes and metadata

The PrimitiveResult object is a list-like container of PubResult objects. Because only one PUB was submitted to the estimator, the PrimitiveResult contains a single PubResult object.

The PUB (primitive unified bloc) result expectation values and standard errors are array-valued. For estimator jobs with ZNE, there are several data fields of expectation values and standard errors available in the PubResult's DataBin container. The data fields for expectation values are briefly discussed here; similar data fields are available for standard errors (stds).

- `pub_result.data.evs`: Expectation values corresponding to zero noise (based on the heuristically best extrapolation).
    - The first axis is the virtual qubit index for the observable (153 virtual qubits/observables).
    - The second axis indexes the parameter value for theta (12 parameter values).

- `pub_result.data.evs_extrapolated`: Expectation values for the extrapolated noise factors for every extrapolator. This array has two additional axes.
    - The third axis indexes the extrapolation methods (2 extrapolators, `exponential` and `linear`).
    - The last axis indexes the `extrapolated_noise_factors` (20 extrapolation points specified in the option).

- `pub_result.data.evs_noise_factors`: Raw expectation values for each noise factor.
    - The third axis indexes the raw `noise_factors` (3 factors).
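The first two axes of these arrays come from broadcasting the observables against the parameter values. The sketch below reproduces that shape calculation with NumPy alone. It assumes that the Estimator follows NumPy broadcasting rules and treats the last axis of the parameter-value array as the index over circuit parameters; the variable names are illustrative.

```python
import numpy as np

num_qubits = 153  # one weight-1 observable per virtual qubit
num_params = 12   # number of theta values

# Each observable is wrapped in its own list, giving an array of shape (153, 1).
observables_shape = (num_qubits, 1)

# The parameter values have shape (12, 1): 12 bindings of a single parameter.
parameter_values = np.linspace(0, np.pi / 2, num_params).reshape((num_params, 1))

# The last axis indexes circuit parameters, so the bindings have shape (12,).
bindings_shape = parameter_values.shape[:-1]

result_shape = np.broadcast_shapes(observables_shape, bindings_shape)
print(result_shape)
```

Without the extra list around each observable, the observables would have shape `(153,)`, which cannot be broadcast against `(12,)`.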

pub_result = primitive_result[0]
print(
    f"{pub_result.data.evs.shape=}\n"
    f"{pub_result.data.evs_extrapolated.shape=}\n"
    f"{pub_result.data.evs_noise_factors.shape=}\n"
)

Output:

pub_result.data.evs.shape=(153, 12)

pub_result.data.evs_extrapolated.shape=(153, 12, 2, 20)

pub_result.data.evs_noise_factors.shape=(153, 12, 3)
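With these shapes in mind, the qubit-averaged quantities mentioned at the start of this step can be obtained by slicing and averaging over the first axis. The following is a minimal sketch that uses random stand-in arrays of the same shapes rather than real results. It assumes that the extrapolator axis follows the order given in the options (`exponential`, then `linear`), and that the noise-factor axes follow the order of `noise_factors` and `extrapolated_noise_factors`.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

# Synthetic stand-ins with the shapes printed above (random numbers, not results).
evs = rng.uniform(-1, 1, size=(153, 12))
evs_extrapolated = rng.uniform(-1, 1, size=(153, 12, 2, 20))
evs_noise_factors = rng.uniform(-1, 1, size=(153, 12, 3))

noise_factors = [1, 1.3, 1.6]
extrapolated_noise_factors = np.linspace(0, max(noise_factors), 20)

# Qubit-averaged mitigated value for each theta.
mitigated_mean = evs.mean(axis=0)

# Qubit-averaged unmitigated value: the raw data at noise factor 1.
raw_mean = evs_noise_factors[:, :, noise_factors.index(1)].mean(axis=0)

# Zero-noise value of each extrapolator: the point where the extrapolated
# noise factor is closest to 0 (index 0 for the linspace above).
zero_index = int(np.argmin(np.abs(extrapolated_noise_factors)))
exponential_zero = evs_extrapolated[:, :, 0, zero_index].mean(axis=0)
linear_zero = evs_extrapolated[:, :, 1, zero_index].mean(axis=0)

print(mitigated_mean.shape, raw_mean.shape, exponential_zero.shape, linear_zero.shape)
```

To work with real data, replace the synthetic arrays with the corresponding fields of `pub_result.data`.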

Several metadata fields are also available in the PrimitiveResult. The metadata includes:

- `resilience/zne/noise_factors`: The raw noise factors

- `resilience/zne/extrapolator`: The extrapolators used for each result

primitive_result.metadata

Output:

{'dynamical_decoupling': {'enable': True,

'sequence_type': 'XY4',

'extra_slack_distribution': 'middle',

'scheduling_method': 'alap'},

'twirling': {'enable_gates': True,

'enable_measure': True,

'num_randomizations': 700,

'shots_per_randomization': 64,

'interleave_randomizations': True,

'strategy': 'active-circuit'},

'resilience': {'measure_mitigation': True,

'zne_mitigation': True,

'pec_mitigation': False,

'zne': {'noise_factors': [1.0, 1.3, 1.6],

'extrapolator': ['exponential', 'linear'],

'extrapolated_noise_factors': [0.0,

0.08421052631578947,

0.16842105263157894,

0.25263157894736843,

0.3368421052631579,

0.42105263157894735,

0.5052631578947369,

0.5894736842105263,

0.6736842105263158,

0.7578947368421053,

0.8421052631578947,

0.9263157894736842,

1.0105263157894737,

1.0947368421052632,

1.1789473684210525,

1.263157894736842,

1.3473684210526315,

1.431578947368421,

1.5157894736842106,

1.6]},

'layer_noise_model': [LayerError(circuit=<qiskit.circuit.quantumcircuit.QuantumCircuit object at 0x168671910>, qubits=[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 57, 58, 59, 60, 61, 62, 64, 65, 66, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155], error=PauliLindbladError(generators=['IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...', ...], rates=[0.00023, 0.00022, 0.00011, 0.00042, 0.0, 0.0, 9e-05, 0.00019, 0.0, 0.0, 0.0, 0.0, 0.00018, 0.0, 0.0, 5e-05, 0.0, 0.0001, 6e-05, 0.00017, 5e-05, 0.0, 0.0, 0.00023, 1e-05, 5e-05, 0.0, 4e-05, 7e-05, 4e-05, 0.00032, 0.0001, 4e-05, 7e-05, 0.00021, 0.00029, 0.00021, 0.00023, 0.00015, 0.00011, 0.0, 7e-05, 1e-05, 4e-05, 0.00014, 0.0, 0.0, 0.00101, 3e-05, 0.0, 0.0, 7e-05, 2e-05, 7e-05, 0.0002, 0.00014, 7e-05, 2e-05, 0.00024, 0.00066, 0.00019, 0.00018, 7e-05, 0.0001, 2e-05, 2e-05, 0.0, 0.0, 7e-05, 0.0, 7e-05, 0.00057, 4e-05, 8e-05, 0.0, 7e-05, 5e-05, 3e-05, 0.00034, 7e-05, 3e-05, 5e-05, 0.00032, 0.00361, 0.00015, 0.00014, 1e-05, 0.00013, 0.0, 0.00012, 0.0, 0.0, 0.0, 0.0, 0.00021, 0.001, 0.0001, 0.0, 0.0, 0.00055, 0.0001, 0.0, 0.00123, 0.0009, 0.0, 0.0001, 0.00127, 0.00392, 0.00031, 2e-05, 0.00036, 0.0, 0.00018, 0.0, 0.0, 0.0, 0.0, 0.00014, 0.0001, 0.0, 0.0005, 0.00023, 0.0, 0.0008, 5e-05, 5e-05, 0.00093, 0.00067, 5e-05, 5e-05, 0.00085, 0.00051, 0.00011, 0.00025, 2e-05, 0.00034, 4e-05, 0.0, 0.0, 0.00019, 6e-05, 0.0, 0.0, 0.00019, 0.0, 8e-05, 0.0, 0.00022, 9e-05, 0.0, 0.00038, 0.00022, 0.0, 9e-05, 0.00037, 7e-05, 0.00038, 0.00025, 6e-05, 0.0, 0.00015, 0.0, 6e-05, 3e-05, 0.0, 0.00012, 0.0, 0.0001, 0.0, 1e-05, 4e-05, 0.00027, 0.00014, 0.0, 0.00029, 0.00016, 0.0, 0.00014, 0.00029, 0.00582, 0.00022, 0.00016, 0.0002, 2e-05, 2e-05, 4e-05, 0.0, 8e-05, 3e-05, 0.0, 0.0, 3e-05, 7e-05, 0.0, 0.00012, 0.00024, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00013, 0.00015, 0.00038, 0.00016, 0.0, 0.0, 0.00036, 0.0, 4e-05, 0.0, 0.00038, 0.0, 4e-05, 1e-05, 0.0006, 0.0, 0.0, 0.0, 0.00011, 2e-05, 0.0, 0.00012, 0.00022, 0.0, 1e-05, 0.0, 0.00029, 0.0, 0.00012, 0.0, 0.0001, 0.00016, 0.00046, 0.00019, 0.0002, 0.0, 0.00047, 0.00017, 0.0, 0.0002, 0.00051, 0.0014, 0.0001, 0.00016, 0.00016, 0.00029, 0.00015, 1e-05, 1e-05, 0.00029, 0.0, 0.00015, 0.0, 0.00032, 0.0, 0.0, 6e-05, 2e-05, 7e-05, 2e-05, 0.00026, 0.0, 2e-05, 0.00015, 6e-05, 2e-05, 7e-05, 0.00027, 
1e-05, 3e-05, 5e-05, 0.0, 7e-05, 0.00011, 0.00015, 0.0, 1e-05, 4e-05, 0.00055, 2e-05, 5e-05, 0.0, 0.0002, 5e-05, 8e-05, 2e-05, 0.00109, 0.0, 0.0, 9e-05, 0.00189, 0.0, 0.00012, 1e-05, 0.00181, 0.00017, 0.0, 0.0, 0.00502, 0.0, 8e-05, 0.00019, 0.0, 0.0, 0.00035, 0.0, 0.0, 0.00013, 0.0, 0.00016, 0.00032, 0.0, 1e-05, 2e-05, 0.0, 2e-05, 0.0, 0.00018, 0.0001, 2e-05, 0.00023, 7e-05, 0.0, 9e-05, 0.00011, 2e-05, 0.0001, 0.00031, 0.00045, 4e-05, 2e-05, 0.0001, 0.00036, 0.00028, 0.0002, 0.00056, 6e-05, 0.0, 0.0, 0.00043, 0.0, 0.0, 6e-05, 0.00038, 0.0, 3e-05, 0.0001, 8e-05, 4e-05, 0.00016, 0.00032, 0.00011, 0.00016, 4e-05, 0.00034, 0.00103, 0.00063, 0.00049, 0.00018, 0.00094, 2e-05, 0.00011, 0.0, 0.00047, 0.0001, 0.0, 0.00016, 0.00136, 5e-05, 0.0, 0.0, 0.0, 0.0, 9e-05, 0.00051, 0.0, 0.00018, 9e-05, 0.0, 9e-05, 0.0, 0.0003, 0.00019, 0.0, 0.0, 0.00425, 0.0004, 0.00043, 0.00032, 0.0, 0.0, 0.00016, 0.00183, 0.0, 0.00012, 0.0, 0.00161, 0.00024, 0.0, 0.0, 0.00024, 0.0, 1e-05, 9e-05, 0.0, 0.0, 0.0002, 4e-05, 0.0, 5e-05, 8e-05, 8e-05, 9e-05, 2e-05, 7e-05, 4e-05, 0.00028, 0.0, 0.00011, 0.0, 0.00019, 0.00013, 4e-05, 0.0, 0.00015, 4e-05, 1e-05, 2e-05, 0.00015, 3e-05, 0.0, 0.00028, 0.0, 2e-05, 0.0001, 0.0, 0.0, 3e-05, 0.0001, 0.00011, 1e-05, 0.0, 0.00433, 0.00025, 0.00023, 0.00046, 0.0, 0.0, 6e-05, 9e-05, 0.00013, 0.0, 0.0, 7e-05, 0.0, 0.00018, 7e-05, 0.00026, 0.0, 0.0, 0.0, 5e-05, 7e-05, 0.0, 0.00029, 2e-05, 0.0, 7e-05, 0.00029, 0.00115, 0.00215, 0.00234, 0.00049, 0.00038, 0.0, 0.00012, 0.0, 0.00019, 5e-05, 0.0, 0.0001, 0.00048, 2e-05, 0.0, 0.0, 2e-05, 1e-05, 0.0001, 0.00022, 1e-05, 0.0001, 1e-05, 0.0002, 0.00033, 0.0004, 0.00036, 0.00022, 0.00068, 0.00095, 0.00373, 0.0003, 0.0, 0.0, 0.00056, 0.00014, 0.0, 1e-05, 0.00039, 0.0, 0.0, 0.0005, 0.0, 9e-05, 0.0, 0.0046, 0.00023, 0.00032, 0.00043, 0.0, 8e-05, 0.0, 0.00035, 9e-05, 0.0, 0.0, 0.00025, 0.0, 0.0, 7e-05, 0.00195, 3e-05, 2e-05, 0.0, 0.00043, 0.0, 0.00017, 0.00054, 0.00036, 0.00017, 0.0, 0.00054, 0.00424, 0.00044, 0.00032, 0.00014, 
0.00021, 0.0, 4e-05, 0.0, 0.0002, 9e-05, 0.0, 0.0, 0.00019, 2e-05, 0.00014, 0.0, 0.0, 0.00024, 0.0, 0.0, 4e-05, 7e-05, 0.0, 0.0, 0.0, 0.0001, 0.0, 1e-05, 0.0, 0.00017, 0.01108, 0.0, 0.00016, 0.0, 6e-05, 8e-05, 0.0, 0.0003, 0.00016, 0.0, 0.0003, 1e-05, 0.0, 0.00016, 0.0002, 0.00042, 0.00026, 0.00031, 0.0003, 0.0, 0.0, 0.0, 0.00028, 0.00019, 0.0, 0.00018, 0.0, 0.00055, 0.0, 0.0, 0.0, 0.00061, 0.0, 0.0, 0.0, 0.00036, 1e-05, 6e-05, 0.0, 0.00047, 0.00029, 0.0, 6e-05, 0.00019, 5e-05, 6e-05, 0.00042, 5e-05, 4e-05, 3e-05, 0.0, 6e-05, 5e-05, 0.00036, 7e-05, 0.0, 0.00017, 0.0, 0.0005, 0.00035, 0.00031, 4e-05, 3e-05, 0.0, 0.0003, 0.0, 0.0, 2e-05, 0.0, 0.0001, 9e-05, 0.0, 0.00017, 0.0, 7e-05, 7e-05, 0.0001, 0.0, 0.0, 6e-05, 0.00015, 0.0, 0.0, 4e-05, 0.00353, 0.0, 9e-05, 0.0, 7e-05, 2e-05, 0.0, 0.00022, 0.00017, 0.0, 2e-05, 0.0003, 8e-05, 0.00039, 0.00025, 0.00059, 0.00028, 0.0, 0.00016, 0.00013, 0.00014, 0.0, 0.0, 0.00021, 0.00012, 0.0, 0.0, 0.0, 0.00013, 0.00021, 0.00327, 8e-05, 2e-05, 8e-05, 1e-05, 0.0, 0.00011, 3e-05, 0.00022, 0.0, 0.00023, 0.0, 0.0, 0.00022, 0.00017, 0.00053, 0.00072, 0.00068, 4e-05, 0.00028, 0.0, 1e-05, 0.00014, 0.00016, 1e-05, 0.00016, 4e-05, 0.00034, 0.00019, 0.0, 0.0, 0.00185, 0.00013, 0.0, 0.00186, 0.00218, 0.0, 0.00013, 0.00218, 0.00392, 0.00057, 0.00043, 0.00024, 0.00012, 8e-05, 0.0, 0.0, 0.0, 0.0, 3e-05, 8e-05, 0.00053, 0.00016, 3e-05, 0.0, 0.0, 0.0, 7e-05, 5e-05, 1e-05, 5e-05, 0.0001, 5e-05, 0.0, 0.0001, 0.0, 0.0, 0.00101, 0.00112, 0.00422, 1e-05, 0.0, 1e-05, 0.00013, 0.00045, 0.0, 0.0, 0.0, 0.00456, 0.0, 0.0, 0.0, 0.00057, 7e-05, 0.0, 0.00057, 0.00036, 0.0, 7e-05, 0.00036, 0.00175, 0.0005, 0.00055, 0.0004, 0.00032, 0.00016, 0.00094, 0.00041, 0.0, 0.00012, 0.00066, 0.00017, 0.00012, 0.0, 0.00063, 0.00595, 0.00032, 0.00016, 0.00077, 0.00057, 0.0001, 8e-05, 0.0, 0.00079, 0.0, 0.0, 0.00011, 0.00037, 1e-05, 0.00015, 7e-05, 0.00025, 0.00023, 0.00027, 0.00012, 9e-05, 0.0, 0.00046, 0.0, 0.0, 9e-05, 0.00035, 0.00168, 0.00025, 0.00023, 0.0004, 3e-05, 
3e-05, 1e-05, 0.0001, 0.00012, 0.0, 0.0001, 1e-05, 0.0, 5e-05, 0.0, 0.00026, 0.0, 1e-05, 9e-05, 0.00031, 9e-05, 0.0, 0.0, 0.0, 9e-05, 1e-05, 0.0002, 0.0, 3e-05, 8e-05, 0.00019, 0.00021, 0.0001, 0.00018, 8e-05, 0.0, 3e-05, 9e-05, 0.00016, 0.0, 9e-05, 9e-05, 0.0, 5e-05, 0.0, 0.0, 5e-05, 5e-05, 0.0, 5e-05, 0.00012, 0.0, 0.00031, 0.0, 0.0, 0.00012, 0.00052, 0.00409, 0.00034, 0.00014, 0.00072, 0.00091, 0.00011, 0.0, 0.00012, 0.00043, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00027, 0.00033, 0.0, 5e-05, 3e-05, 4e-05, 3e-05, 4e-05, 0.0, 0.00023, 3e-05, 5e-05, 0.00041, 0.0, 0.0, 0.00017, 0.00611, 0.00012, 0.00021, 0.00031, 0.0, 6e-05, 0.0, 0.00024, 0.0, 4e-05, 0.00024, 0.00024, 0.00012, 6e-05, 2e-05, 0.00184, 0.00023, 0.0, 2e-05, 0.00029, 0.0, 0.0001, 0.0001, 0.0, 0.0, 0.0, 0.00015, 0.00018, 0.00014, 0.00013, 0.00011, 0.00133, 0.0, 0.00012, 0.0, 0.00087, 0.00011, 0.0, 0.00022, 0.0008, 0.00014, 0.00013, 0.00013, 0.0, 0.0, 0.0, 0.00031, 7e-05, 0.00012, 7e-05, 0.0, 0.00059, 0.0, 0.00024, 1e-05, 0.00042, 0.00029, 0.00017, 0.0, 7e-05, 0.00012, 0.00043, 0.0, 0.0, 0.00015, 6e-05, 0.00012, 7e-05, 0.00031, 0.0, 0.00018, 0.0, 0.0008, 0.00052, 0.00043, 0.00036, 1e-05, 3e-05, 0.0, 0.00027, 0.0, 0.0, 0.0, 0.0, 0.00014, 7e-05, 1e-05, 0.00012, 0.00014, 0.0, 0.0, 0.00012, 0.0, 9e-05, 0.00047, 0.0, 9e-05, 0.0, 0.00027, 0.00046, 0.00027, 0.0002, 0.00015, 0.00022, 0.0, 8e-05, 0.00019, 0.00017, 8e-05, 0.0, 1e-05, 0.00048, 1e-05, 0.00028, 0.0, 0.00141, 0.0, 0.0, 0.00025, 0.00016, 4e-05, 0.00208, 0.00073, 0.0, 0.00025, 0.00014, 4e-05, 0.00016, 0.00174, 0.00053, 0.0002, 0.0, 0.0, 0.00049, 0.00026, 0.00026, 0.0, 0.00011, 0.0, 0.00018, 1e-05, 0.00016, 0.0, 0.00011, 0.00023, 0.00016, 0.00062, 1e-05, 0.00037, 0.0001, 6e-05, 0.00045, 0.00017, 6e-05, 0.0001, 0.00042, 0.00058, 0.00027, 0.0003, 0.00049, 0.0002, 0.0, 4e-05, 0.0, 4e-05, 1e-05, 3e-05, 5e-05, 0.00089, 0.0, 0.0, 4e-05, 0.0, 0.0, 0.00014, 0.0, 0.0, 9e-05, 0.00027, 0.0, 0.0002, 0.0, 0.0, 9e-05, 0.0, 0.00021, 0.00014, 0.0, 3e-05, 0.0, 0.00024, 0.00013, 
0.0003, 0.00016, 3e-05, 0.0, 0.0, 0.00031, 6e-05, 2e-05, 0.0, 0.00039, 5e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00159, 0.00012, 3e-05, 0.00026, 0.00087, 0.0, 1e-05, 9e-05, 0.00077, 0.00015, 0.0, 0.00018, 0.00094, 0.0, 0.0002, 0.0004, 0.00028, 0.0, 0.0, 0.00028, 0.0, 0.0, 0.0, 0.0002, 0.0, 0.0, 0.00033, 0.0, 0.0, 3e-05, 0.00015, 0.00028, 0.00028, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00044, 0.0, 0.00011, 0.00022, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00156, 0.00155, 0.0, 0.00038, 0.0, 0.0, 5e-05, 1e-05, 0.00014, 0.0, 7e-05, 0.00028, 8e-05, 0.0, 0.00011, 0.00023, 0.0, 0.00013, 0.0, 0.00019, 7e-05, 0.0, 3e-05, 0.00056, 0.0, 4e-05, 0.0, 0.00053, 0.00021, 0.00034, 0.00053, 0.00058, 0.00034, 0.00021, 0.00058, 0.00102, 1e-05, 0.00014, 0.00102, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00037, 0.00037, 0.00021, 0.00012, 5e-05, 0.0, 0.00037, 0.0, 0.0, 5e-05, 0.00024, 0.00028, 0.00037, 0.00037, 0.00013, 0.00022, 0.00011, 1e-05, 0.0, 0.00021, 0.0, 0.0, 0.00011, 0.00013, 8e-05, 0.0, 1e-05, 0.00029, 0.0, 8e-05, 0.0, 0.0, 0.0001, 0.00043, 0.00018, 5e-05, 9e-05, 3e-05, 0.0001, 0.0, 0.00041, 0.00012, 0.0, 0.0001, 9e-05, 0.00035, 0.00032, 0.00027, 0.00059, 1e-05, 6e-05, 0.0, 0.00024, 6e-05, 0.0, 0.0001, 0.00036, 0.0, 0.0, 0.0001, 0.00013, 0.0, 0.0, 0.00016, 0.00012, 3e-05, 7e-05, 0.0, 0.00011, 6e-05, 5e-05, 5e-05, 0.00058, 0.0, 8e-05, 0.0, 0.0004, 2e-05, 1e-05, 0.0001, 0.00043, 0.00011, 0.0, 0.0, 0.00031, 0.0, 3e-05, 0.00032, 0.0, 0.0, 1e-05, 0.0002, 3e-05, 0.0, 0.00023, 0.0, 0.0, 0.0, 0.0, 0.00037, 0.00028, 3e-05, 0.0, 0.0, 1e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00091, 0.0, 3e-05, 8e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00102, 0.00091, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00351, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 
0.42262, 0.0, 0.19471, 0.0, 0.8064, 0.0, 0.57953, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.72255, 0.0, 0.61733, 0.56765, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25836, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.26103, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.48452, 0.00018, 7e-05, 0.0, 2e-05, 6e-05, 0.0, 0.0002, 0.0, 0.00056, 0.0, 5e-05, 0.0, 0.00025, 3e-05, 0.0, 0.0003, 8e-05, 0.0, 3e-05, 0.00014, 0.00024, 0.00042, 0.0003, 6e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.28441, 0.0, 0.0, 0.0, 0.07122, 0.0, 0.0, 0.0, 0.36139, 0.0, 0.0, 0.0, 0.00067, 0.00072, 0.00012, 0.00431, 0.0, 0.0, 0.00505, 0.0, 0.0, 0.0004, 0.00379, 0.0, 0.0, 0.00437, 0.0, 0.0, 0.00017, 0.00169, 0.00027, 0.00025, 0.0005, 2e-05, 0.00016, 0.0, 0.00051, 0.0, 0.0, 0.00014, 0.0, 0.0, 0.00015, 0.0002, 0.0, 0.00034, 0.00027, 0.0, 8e-05, 0.00016, 0.0, 6e-05, 0.0, 0.0001, 1e-05, 0.00015, 0.0, 8e-05, 0.0, 2e-05, 0.00013, 8e-05, 0.0, 0.0, 0.00014, 0.0, 0.0, 2e-05, 0.00053, 0.0, 0.0, 5e-05, 0.0, 5e-05, 0.0, 0.00013, 4e-05, 0.0, 0.00037, 0.0, 0.0, 6e-05, 0.00011, 0.0, 4e-05, 0.00034, 0.0, 0.0, 0.0, 0.00015, 0.00021, 0.00017, 0.00036, 0.00015, 6e-05, 7e-05, 9e-05, 0.0, 1e-05, 6e-05, 0.0, 0.0, 0.00011, 0.00012, 5e-05, 0.00059, 4e-05, 0.00029, 0.00059, 0.00055, 0.00029, 4e-05, 0.00055, 0.00048, 0.00037, 7e-05, 0.00039, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.35497, 0.10255, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.00343, 0.0, 0.0, 1.00343, 0.0, 0.0, 0.0, 0.0, 1.79398, 0.45751, 0.0, 2.48969, 0.0, 0.0, 0.0, 0.0, 0.2536, 0.0, 0.0, 0.0, 0.58887, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.2536, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00105, 0.0, 0.0, 0.0, 0.00092, 0.0, 0.0, 0.0, 0.00212, 0.0, 0.0, 0.0, 0.00064, 0.00028, 0.00014, 0.00065, 0.0004, 0.00014, 0.00028, 0.0004, 0.00087, 
0.00041, 0.00017, 0.00044, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.14893, 0.0, 0.0, 0.0, 0.56032, 0.0, 0.0, 0.0, 0.0, 0.00051, 0.00048, 0.0, 0.0, 0.00048, 0.00051, 0.0, 0.0, 0.00105, 0.00092, 0.00045, 0.00023, 0.0001, 0.0, 0.00031, 6e-05, 3e-05, 0.00011, 0.00021, 0.0, 0.00012, 3e-05, 8e-05, 8e-05, 3e-05, 2e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00172, 0.00023, 0.0002, 0.00015, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.65411, 0.0, 0.0, 0.83803, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.58306, 0.0, 0.42915, 0.0, 0.0, 0.0, 0.0, 0.0, 1.86157, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.51166, 0.0, 0.0, 0.0, 0.0, 0.0, 0.51166, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.01221, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0006, 0.0, 0.0, 0.0, 0.00052, 0.0, 0.0, 0.0, 0.0015, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.14893, 0.03192, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0025, 0.0, 0.0, 0.0, 0.00182, 0.0, 0.0, 0.00032, 0.00263, 0.0, 0.0, 0.00024, 0.00736, 0.0, 0.0, 0.0, 2e-05, 0.0, 0.0, 0.0, 0.0, 0.00015, 2e-05, 6e-05, 0.0, 0.0, 0.0, 0.00015, 0.0, 6e-05, 0.00366, 0.0, 0.0, 0.0, 0.00213, 0.00288, 0.0]))),

LayerError(circuit=<qiskit.circuit.quantumcircuit.QuantumCircuit object at 0x169b1da90>, qubits=[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 57, 58, 59, 60, 61, 62, 64, 65, 66, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155], error=PauliLindbladError(generators=['IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...', ...], rates=[0.00023, 0.00024, 0.0002, 0.00015, 2e-05, 0.0, 0.00017, 0.00014, 0.0, 2e-05, 0.00019, 9e-05, 0.00023, 0.00024, 3e-05, 0.00012, 2e-05, 0.0, 0.0, 0.0002, 0.0, 4e-05, 0.0, 0.0001, 0.0, 2e-05, 0.0, 0.00023, 9e-05, 0.0, 0.0, 0.00029, 0.0, 1e-05, 3e-05, 0.00029, 0.0, 4e-05, 2e-05, 0.0002, 0.00012, 0.0, 0.0, 0.00022, 0.0, 0.0, 0.0001, 0.00036, 5e-05, 2e-05, 3e-05, 0.00012, 7e-05, 0.0, 0.0, 7e-05, 0.0, 0.0001, 0.0, 0.0057, 0.0, 0.0, 3e-05, 0.0001, 0.00012, 0.0, 0.00014, 0.00014, 0.0, 0.00012, 0.00019, 0.00049, 0.00019, 0.00017, 0.0, 0.00021, 4e-05, 5e-05, 0.00013, 0.00018, 0.0, 0.0, 0.0, 0.00523, 0.0, 0.0, 0.00013, 1e-05, 0.00014, 0.0, 0.00028, 0.0, 0.0, 0.00014, 0.00019, 3e-05, 0.00057, 0.0002, 0.00052, 0.00144, 0.0, 0.0, 5e-05, 0.00099, 0.00028, 1e-05, 2e-05, 0.00158, 0.0, 0.00018, 0.0, 0.00018, 5e-05, 6e-05, 1e-05, 3e-05, 0.0, 3e-05, 0.00014, 0.00034, 0.0, 0.0, 0.00019, 0.00023, 0.0, 3e-05, 0.0, 0.0, 1e-05, 6e-05, 0.0, 0.00103, 0.0, 0.0, 0.00012, 0.00045, 0.0, 5e-05, 0.0, 0.00037, 2e-05, 0.0, 5e-05, 0.00014, 4e-05, 0.0, 0.0, 0.0, 0.00011, 0.0, 8e-05, 6e-05, 6e-05, 2e-05, 0.0, 0.00071, 0.0, 5e-05, 0.0, 0.0001, 0.00012, 0.0, 0.00021, 0.00016, 0.0, 0.00012, 0.00031, 2e-05, 0.00019, 0.00014, 0.00021, 0.00014, 0.00011, 0.0, 9e-05, 0.00012, 0.0, 0.00011, 0.0, 0.00018, 0.0, 3e-05, 0.0, 9e-05, 6e-05, 0.0, 0.00015, 0.00025, 0.0, 6e-05, 0.00025, 0.00037, 0.00049, 0.00035, 0.0001, 0.0, 2e-05, 0.0, 0.00014, 0.0002, 0.0, 2e-05, 0.0, 0.00022, 0.00012, 0.0, 6e-05, 0.00024, 1e-05, 0.00015, 0.00043, 0.00018, 0.00015, 1e-05, 0.00042, 0.00048, 0.00031, 0.00013, 0.0002, 0.00038, 3e-05, 7e-05, 3e-05, 0.00033, 0.0, 9e-05, 0.0, 0.00011, 0.0, 0.0, 5e-05, 8e-05, 0.00039, 0.00046, 0.00386, 0.00029, 3e-05, 0.0, 0.00258, 0.0003, 0.0, 0.0, 0.00242, 0.0, 0.0, 0.0, 0.00012, 0.0, 0.00039, 0.00028, 0.00047, 0.00039, 0.0, 0.00065, 0.0, 0.00431, 0.00316, 7e-05, 0.0, 1e-05, 0.0, 3e-05, 0.0, 0.00018, 0.00014, 0.0, 0.0, 0.0, 0.0, 0.0, 
0.0, 0.0002, 6e-05, 4e-05, 0.0, 0.0, 0.00016, 0.0, 4e-05, 0.00027, 0.0, 4e-05, 0.00036, 0.00016, 4e-05, 0.0, 0.00034, 0.00059, 0.00034, 0.00014, 0.00017, 0.0, 0.0, 0.0, 0.00011, 6e-05, 8e-05, 6e-05, 0.0, 0.00052, 1e-05, 0.00011, 0.0, 0.0001, 3e-05, 3e-05, 0.00024, 0.00011, 3e-05, 3e-05, 0.00024, 0.00011, 0.00034, 0.00028, 3e-05, 0.00018, 1e-05, 9e-05, 0.00026, 0.0, 0.0, 4e-05, 2e-05, 9e-05, 1e-05, 0.00038, 0.00013, 0.0, 8e-05, 0.00044, 0.00014, 0.00024, 0.00014, 0.0, 0.00012, 1e-05, 0.00081, 4e-05, 0.00015, 7e-05, 0.00086, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2e-05, 0.00025, 9e-05, 0.00022, 0.0, 0.0, 0.00284, 0.0, 0.0, 0.00024, 0.0001, 8e-05, 0.0, 4e-05, 0.0, 0.0, 8e-05, 0.00013, 0.00078, 0.00025, 0.0001, 3e-05, 3e-05, 0.00015, 0.0002, 0.0, 0.00011, 0.00016, 0.00066, 0.00017, 4e-05, 0.0, 0.0, 0.00016, 0.00011, 0.00044, 0.00846, 0.0, 4e-05, 0.0, 0.00022, 0.00021, 3e-05, 0.0005, 0.00029, 0.0, 0.0, 0.0002, 0.0, 0.00012, 0.00027, 0.00071, 0.0, 0.00011, 0.0, 6e-05, 0.00023, 0.0, 0.00026, 0.00012, 0.0, 0.00023, 0.00036, 0.00327, 0.0008, 0.0006, 0.00042, 7e-05, 6e-05, 0.0, 5e-05, 0.0001, 7e-05, 4e-05, 0.0, 6e-05, 0.0, 0.00011, 0.0, 0.0002, 0.0, 5e-05, 1e-05, 0.0, 5e-05, 0.00027, 0.00014, 8e-05, 0.0, 0.0, 5e-05, 0.0, 0.00022, 8e-05, 0.0, 0.0, 7e-05, 0.00018, 0.00022, 6e-05, 3e-05, 0.00013, 0.00028, 0.0, 0.00061, 0.0, 0.0, 0.0, 0.00025, 0.0, 0.0, 0.0, 0.00038, 0.0, 0.0, 0.0, 0.00031, 0.0, 0.0, 6e-05, 0.00069, 0.00025, 6e-05, 3e-05, 0.00011, 0.0, 8e-05, 0.00024, 5e-05, 8e-05, 0.0, 0.00023, 0.00011, 0.00059, 0.0005, 0.0002, 0.0, 8e-05, 0.0, 0.00013, 0.0, 0.0, 9e-05, 0.0, 0.00062, 0.0, 0.0, 0.0, 0.00034, 0.00078, 0.00241, 0.00028, 0.0, 0.00015, 6e-05, 0.0, 5e-05, 0.0, 0.00034, 7e-05, 0.0, 3e-05, 3e-05, 7e-05, 0.0, 0.00256, 0.0, 1e-05, 0.00014, 4e-05, 0.0, 0.00014, 0.00017, 0.0, 0.00011, 0.00022, 0.00012, 0.00011, 0.0, 0.00038, 0.00117, 0.00053, 0.00054, 0.0002, 0.00065, 0.0, 0.0, 0.0, 0.0009, 5e-05, 0.0, 0.0, 0.00278, 0.0, 0.00026, 0.0, 5e-05, 0.0, 4e-05, 0.00019, 
0.00015, 4e-05, 0.0, 2e-05, 0.00038, 1e-05, 0.0, 0.0, 0.00012, 0.0, 5e-05, 0.0, 0.0, 5e-05, 0.00019, 0.0, 0.0, 0.0, 7e-05, 5e-05, 0.0, 0.0002, 0.00067, 4e-05, 1e-05, 0.0, 0.00028, 0.00021, 3e-05, 0.00029, 0.0, 5e-05, 0.0001, 7e-05, 2e-05, 0.0, 0.0, 0.00033, 0.0, 9e-05, 0.0, 0.00015, 9e-05, 0.0, 0.0, 0.0, 0.0, 0.00012, 7e-05, 0.00463, 0.0, 0.00011, 0.0, 0.00012, 0.00012, 0.0, 0.00022, 8e-05, 0.0, 0.00012, 0.0002, 0.0005, 0.00043, 0.00034, 0.00063, 0.00041, 0.00014, 0.0, 0.0, 0.0001, 1e-05, 0.0, 0.00038, 0.0, 9e-05, 0.00015, 0.0, 3e-05, 1e-05, 0.00057, 0.0, 9e-05, 0.00036, 0.0, 8e-05, 0.00016, 3e-05, 0.00018, 4e-05, 0.00024, 0.00017, 4e-05, 0.00018, 0.00034, 0.00022, 0.00067, 0.00067, 0.00038, 5e-05, 0.00021, 0.0, 0.0, 0.0, 0.0, 9e-05, 0.00017, 0.00015, 0.0, 8e-05, 7e-05, 0.0, 1e-05, 0.0, 0.0, 8e-05, 0.0, 0.00015, 4e-05, 0.00039, 7e-05, 1e-05, 6e-05, 0.0, 0.0, 0.00012, 0.00036, 0.00016, 0.00016, 0.0, 0.0, 0.00012, 0.0, 0.00019, 0.0, 0.0, 3e-05, 0.012, 0.00011, 0.00013, 0.00021, 0.00023, 9e-05, 4e-05, 0.00025, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 9e-05, 0.00037, 0.0, 0.00026, 0.0, 0.00015, 0.0, 0.0, 0.00025, 8e-05, 0.0, 0.0, 0.00023, 0.0, 0.00027, 5e-05, 0.00059, 0.00037, 0.0001, 0.0, 0.00016, 0.0003, 0.0, 0.00011, 4e-05, 0.00033, 0.0001, 5e-05, 0.0, 0.00017, 0.00016, 0.0, 0.00018, 4e-05, 0.0, 0.00016, 0.00013, 0.00093, 0.00036, 0.0004, 0.0002, 0.00017, 0.0, 0.00012, 0.0, 0.00022, 8e-05, 1e-05, 0.0, 0.0, 1e-05, 2e-05, 6e-05, 0.00034, 0.00051, 0.00274, 0.0, 7e-05, 0.0, 0.00036, 0.00032, 0.0, 7e-05, 0.00053, 0.00731, 0.00034, 0.00051, 0.00117, 0.00059, 0.0, 3e-05, 0.00013, 0.00072, 0.0001, 5e-05, 0.0, 0.00092, 0.0002, 0.0, 0.00026, 0.00028, 0.00037, 0.00024, 5e-05, 0.0, 0.0, 0.00018, 0.0, 3e-05, 2e-05, 0.0, 5e-05, 0.0, 0.0, 0.0, 0.00028, 5e-05, 7e-05, 0.00028, 0.00036, 7e-05, 5e-05, 0.00036, 0.00026, 0.00045, 0.00024, 0.00019, 0.00069, 0.00045, 0.00035, 0.0, 0.0, 8e-05, 7e-05, 3e-05, 9e-05, 0.0, 0.0, 0.00344, 0.0, 0.0, 0.00021, 0.00012, 8e-05, 6e-05, 0.0, 0.0, 2e-05, 0.0, 
0.00016, 0.00024, 1e-05, 0.0, 8e-05, 6e-05, 0.0, 5e-05, 0.0, 0.00015, 0.0, 0.00021, 0.00013, 0.0, 0.0, 6e-05, 0.0, 0.00015, 0.00042, 0.00153, 0.0, 3e-05, 2e-05, 0.00029, 0.00013, 0.00029, 0.00033, 0.0, 0.0, 0.0, 0.00045, 6e-05, 5e-05, 2e-05, 0.00036, 3e-05, 0.00017, 0.00019, 0.00035, 1e-05, 0.00018, 3e-05, 0.00012, 0.00019, 9e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00013, 4e-05, 9e-05, 0.0, 6e-05, 0.0, 0.00024, 0.0, 0.00023, 0.00018, 0.0, 0.0, 0.00013, 0.0, 0.0001, 0.0, 1e-05, 3e-05, 0.00022, 7e-05, 6e-05, 0.0, 0.0, 3e-05, 1e-05, 0.00013, 0.00014, 0.0, 0.0, 8e-05, 0.00026, 0.0003, 0.00026, 0.00093, 4e-05, 0.0, 7e-05, 0.00102, 0.0, 0.00013, 0.0, 0.00105, 0.00017, 0.0, 0.00023, 0.00015, 0.0001, 0.0, 0.0, 6e-05, 0.0, 0.0, 0.0002, 0.00011, 0.00013, 0.0002, 3e-05, 0.00171, 0.00014, 0.0002, 0.00187, 0.0012, 0.0002, 0.00014, 0.00136, 0.00062, 0.00025, 0.00018, 0.00041, 0.00014, 0.00014, 0.00017, 0.00014, 0.0002, 0.00017, 0.00014, 0.0002, 0.00061, 0.0, 0.0, 2e-05, 0.0002, 0.00017, 0.00027, 2e-05, 5e-05, 0.0, 0.0, 0.0, 0.00012, 0.00021, 0.0, 8e-05, 1e-05, 1e-05, 0.00012, 0.0, 0.00021, 3e-05, 0.0, 7e-05, 3e-05, 0.0002, 0.00017, 0.00021, 0.00021, 1e-05, 9e-05, 0.0, 0.00019, 2e-05, 3e-05, 1e-05, 0.0, 0.0001, 0.0, 0.00017, 1e-05, 0.0, 0.0, 0.00014, 0.00019, 0.0, 0.0, 0.0, 0.00041, 0.0, 5e-05, 0.0, 0.00042, 0.0, 0.00011, 0.00042, 0.00022, 0.00011, 0.0, 0.00041, 9e-05, 0.0004, 0.00045, 0.00028, 0.00119, 0.00015, 0.0, 0.00135, 0.0, 4e-05, 0.0, 0.0006, 0.0, 0.00015, 0.00101, 0.0, 0.0, 0.00013, 0.00359, 0.00025, 0.00025, 0.00015, 0.0, 0.00014, 0.00019, 0.00031, 5e-05, 0.0, 7e-05, 0.00019, 6e-05, 0.00015, 0.0, 0.00035, 0.0, 0.00012, 8e-05, 0.0, 0.0, 0.0, 0.0001, 0.0, 0.0, 6e-05, 0.0, 0.00024, 6e-05, 0.0, 0.00015, 0.00041, 9e-05, 5e-05, 0.00013, 0.00044, 0.0, 5e-05, 6e-05, 0.00037, 0.00019, 0.00014, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00155, 0.00016, 0.00016, 0.0002, 0.00016, 0.00015, 0.00018, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 
0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0026, 0.0, 0.0, 0.0, 0.00259, 0.0, 0.0, 0.0, 0.0, 0.00027, 0.0, 0.0, 4e-05, 0.00018, 0.0, 0.0, 8e-05, 0.00033, 0.0, 0.0, 0.00019, 8e-05, 0.0, 0.00012, 2e-05, 0.0001, 3e-05, 7e-05, 0.0001, 5e-05, 0.00022, 8e-05, 0.00022, 0.00023, 6e-05, 1e-05, 0.0003, 0.00017, 1e-05, 6e-05, 0.00022, 0.00014, 0.00036, 0.00027, 0.0001, 6.51443, 0.52125, 0.52158, 0.78271, 6.17405, 0.18049, 0.18064, 0.44206, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00074, 0.00051, 0.00027, 0.0, 0.0, 3e-05, 7e-05, 0.00011, 5e-05, 1e-05, 0.0, 0.0, 0.0, 1e-05, 0.00011, 0.00024, 0.0, 0.0, 0.0, 3e-05, 0.00011, 8e-05, 0.0, 0.0002, 5e-05, 0.0, 0.00014, 0.0, 0.00013, 6e-05, 0.00042, 1e-05, 0.0001, 0.00011, 1e-05, 6e-05, 0.00013, 0.0004, 0.00012, 8e-05, 7e-05, 0.0007, 0.00021, 0.00031, 0.00022, 6e-05, 3e-05, 3e-05, 0.00032, 4e-05, 5e-05, 0.0, 0.00024, 0.0, 7e-05, 0.0, 0.00017, 0.00016, 0.0, 0.0, 0.00013, 0.0, 0.0, 0.00044, 0.0003, 0.0, 0.0, 0.00039, 0.0002, 0.00041, 0.00031, 0.00019, 0.00021, 0.00013, 1e-05, 0.0, 0.00018, 0.0, 0.0, 0.00021, 4e-05, 8e-05, 7e-05, 8e-05, 0.0001, 8e-05, 0.0, 3e-05, 9e-05, 4e-05, 0.00045, 0.0, 0.0, 5e-05, 0.00011, 4e-05, 9e-05, 0.0005, 0.0, 0.0, 0.0, 2e-05, 0.00036, 0.00039, 6e-05, 0.0007, 5e-05, 8e-05, 8e-05, 0.00045, 0.00012, 0.00013, 0.00013, 0.00048, 7e-05, 0.00015, 4e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.4467, 0.52508, 0.60915, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 11.73577, 0.0, 0.0, 0.0, 0.03059, 0.1465, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.49162, 0.0, 1.32443, 1.06941, 0.00783, 0.20731, 0.0, 0.90422, 0.37165, 0.21968, 0.0, 0.12518, 0.0, 0.0, 0.0, 0.03519, 0.0, 0.0, 0.0, 0.33613, 
0.0, 0.0, 0.0, 2.53328, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.04769, 0.04771, 0.0, 0.0, 0.0, 0.0, 0.0, 0.04771, 0.04769, 0.0, 0.0, 0.0, 0.0, 0.0, 0.03519, 0.33613, 0.73628, 0.0, 6e-05, 4e-05, 0.00017, 0.0, 4e-05, 6e-05, 0.00024, 0.00014, 0.00026, 0.00026, 0.00017, 9e-05, 7e-05, 0.0, 0.0, 6e-05, 0.0, 0.0, 5e-05, 0.00033, 3e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0106, 0.0, 0.0, 0.02846, 0.0, 0.0, 0.0, 0.0, 0.0, 1.28455, 1.49555, 0.86131, 0.00017, 0.00109, 0.00015, 0.00414, 0.00023, 0.0, 0.0, 0.0003, 0.0, 0.0001, 0.00456, 5e-05, 0.0, 0.0, 8e-05, 0.0, 0.0, 0.00042, 0.0, 0.0002, 0.00019, 0.0, 0.00023, 0.00016, 7e-05, 8e-05, 8e-05, 0.0002, 0.0001, 8e-05, 8e-05, 0.00033, 0.00024, 0.0048, 0.00472, 0.00032, 0.00047, 0.0, 0.00014, 0.00011, 0.00021, 0.00013, 8e-05, 1e-05, 0.00457, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00017, 0.0002, 0.0, 0.00017, 0.0, 4e-05, 0.0, 0.00099, 0.00053, 0.00067, 0.0002, 0.00025, 0.0, 0.00033, 0.00013, 0.0, 0.0, 0.00023, 0.0, 0.00025, 0.00035, 2e-05, 0.0001, 0.0, 0.00023, 0.00016, 0.0001, 0.00042, 0.0, 0.00013, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.03387, 0.0, 0.0, 0.07022, 0.0, 0.0, 0.14041, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 4.36897, 0.0, 0.0, 0.02453, 0.0, 0.0, 0.0, 0.0, 0.0, 0.47746, 0.0, 0.0, 2.37857, 3.29398, 0.0, 0.0, 0.51162, 0.0, 0.0, 0.0, 0.48045, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1305, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0023, 0.0, 0.0, 0.0, 7e-05, 0.0, 0.0, 0.00036, 0.00029, 0.0, 0.0, 0.0003, 3e-05, 0.0, 0.0, 0.00037, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00226, 0.00027, 0.0001, 0.00022, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 
0.0, 0.0, 0.0, 0.0, 1.94009, 0.0, 0.00012, 6e-05, 0.0, 0.0, 8e-05, 0.0, 0.00011, 0.0, 5e-05, 5e-05, 0.0, 3e-05, 5e-05, 0.00035, 0.0, 0.0, 0.0, 0.0001, 0.0, 0.0, 0.0, 0.0001, 0.0, 3e-05, 5e-05, 0.0, 0.00058, 0.00018, 0.00016, 0.00022, 0.00062, 0.00016, 0.00024, 0.00016, 0.0006, 0.0002, 0.00019, 0.00022, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00668, 0.01572, 0.01389, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.85755, 0.15667, 0.0, 0.85755, 2.2648, 0.0, 0.15667, 2.43473, 0.11756, 0.01455, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.17147, 0.09399, 0.06359, 0.06351, 0.19824, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.16993, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.49282, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00273, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00078, 0.0, 0.0, 0.0, 0.00033, 0.0, 0.0, 0.00046, 0.0, 0.0, 0.0, 0.0, 0.00029, 0.0, 0.0, 0.00053, 0.00118, 0.0, 0.00043, 0.0, 0.00202, 0.00011, 0.0, 5e-05, 0.0465, 0.0, 0.00036, 0.0, 0.00019, 0.0, 0.0, 0.00027, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 7e-05, 0.0, 0.00012, 0.00057, 8e-05, 0.00023, 0.00027, 0.0, 0.0, 0.0]))),

LayerError(circuit=<qiskit.circuit.quantumcircuit.QuantumCircuit object at 0x1681dd610>, qubits=[0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 57, 58, 59, 60, 61, 62, 64, 65, 66, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155], error=PauliLindbladError(generators=['IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...',

'IIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIIII...', ...], rates=[0.00038, 0.00048, 0.0002, 0.00022, 3e-05, 1e-05, 0.0, 0.00013, 9e-05, 0.0, 1e-05, 0.0001, 0.0, 3e-05, 4e-05, 0.00014, 5e-05, 5e-05, 0.00024, 5e-05, 5e-05, 5e-05, 0.00015, 0.00023, 0.00026, 0.00023, 0.00012, 0.0005, 0.0, 2e-05, 0.0, 0.00036, 9e-05, 1e-05, 1e-05, 0.00045, 0.0, 6e-05, 6e-05, 8e-05, 0.0, 0.00011, 0.00018, 7e-05, 0.00011, 0.0, 0.00014, 0.00053, 0.00057, 0.00067, 0.00012, 0.0001, 4e-05, 7e-05, 0.00012, 0.00036, 4e-05, 0.0, 0.0, 0.00027, 2e-05, 0.0, 0.00014, 0.00066, 0.0, 0.00012, 0.0, 0.00064, 0.0, 5e-05, 1e-05, 0.00027, 0.00015, 0.0, 4e-05, 4e-05, 0.0, 0.0, 7e-05, 0.0, 0.00015, 0.0, 0.0, 0.00019, 0.0, 5e-05, 6e-05, 0.00044, 0.0, 6e-05, 0.0, 0.00041, 0.00014, 0.0, 0.0, 0.00012, 2e-05, 0.0, 3e-05, 0.00081, 0.0, 6e-05, 0.0, 0.00088, 2e-05, 0.0, 0.0, 0.0006, 0.0, 0.0, 0.00014, 0.00018, 4e-05, 6e-05, 0.00025, 0.0, 6e-05, 4e-05, 7e-05, 0.0003, 0.00088, 0.00091, 0.00019, 0.0, 0.0, 0.00013, 2e-05, 0.00028, 3e-05, 0.0, 3e-05, 0.00331, 0.0, 4e-05, 1e-05, 8e-05, 0.0, 0.00026, 0.00033, 0.0, 0.00026, 0.0, 0.0, 0.0, 0.00043, 0.00034, 0.00075, 0.00041, 0.0, 7e-05, 0.0, 0.00026, 5e-05, 2e-05, 0.0, 4e-05, 0.00015, 0.0, 6e-05, 0.00042, 1e-05, 5e-05, 0.0, 0.00041, 0.0, 0.0, 7e-05, 3e-05, 0.0, 8e-05, 0.0, 0.00025, 0.0, 0.0, 0.0, 0.0, 0.00018, 0.0, 9e-05, 0.00113, 0.0, 8e-05, 0.0, 0.00029, 0.0, 0.00019, 0.0, 0.00036, 5e-05, 0.0, 0.0, 0.00032, 0.0, 0.0, 0.0001, 0.00019, 3e-05, 9e-05, 0.00034, 0.00016, 9e-05, 3e-05, 0.00022, 0.00028, 0.00028, 0.00019, 0.00016, 0.00067, 0.0, 0.0, 0.0, 0.00053, 0.00018, 0.0, 0.00017, 0.00041, 0.0001, 0.0, 0.0, 0.00011, 0.0, 9e-05, 0.00023, 0.00025, 9e-05, 0.0, 0.00026, 0.00011, 0.00026, 0.00027, 8e-05, 0.00054, 0.00034, 0.00045, 0.00066, 0.0, 0.0, 3e-05, 0.00041, 1e-05, 0.00013, 3e-05, 0.00271, 0.0, 0.0, 6e-05, 0.00022, 6e-05, 0.0, 0.0001, 0.00011, 0.0, 0.00011, 0.0, 0.00045, 1e-05, 0.0, 7e-05, 0.0, 1e-05, 0.0, 0.0002, 0.0, 9e-05, 0.00029, 2e-05, 0.00011, 7e-05, 8e-05, 9e-05, 
0.0, 0.00034, 1e-05, 0.0, 0.0, 0.00022, 0.00037, 0.00022, 0.0002, 0.00035, 0.0, 0.0, 0.0, 0.00031, 1e-05, 5e-05, 0.0, 0.00049, 8e-05, 0.0, 0.00011, 0.00012, 9e-05, 0.0, 0.00037, 0.00013, 0.0, 9e-05, 0.00035, 0.00096, 0.0004, 0.00041, 0.00046, 0.00031, 0.0, 0.0002, 0.0, 0.0001, 9e-05, 1e-05, 0.00012, 9e-05, 7e-05, 0.0, 0.00031, 0.00016, 0.00013, 0.0, 0.0, 9e-05, 4e-05, 4e-05, 0.00019, 0.0, 6e-05, 4e-05, 0.0, 7e-05, 8e-05, 0.00111, 3e-05, 0.0, 7e-05, 5e-05, 0.0, 0.00018, 0.00081, 8e-05, 6e-05, 0.00085, 0.00063, 6e-05, 8e-05, 0.0007, 0.00021, 0.00046, 0.00044, 0.00022, 0.0, 4e-05, 0.0, 0.00018, 0.00014, 0.0, 5e-05, 0.0, 0.00018, 0.0, 1e-05, 0.0, 0.0, 3e-05, 8e-05, 0.00033, 1e-05, 8e-05, 3e-05, 0.00034, 0.00165, 0.00025, 0.00028, 0.0, 0.0, 0.0, 0.00016, 0.0, 0.0, 0.00032, 0.0, 0.00031, 0.00016, 0.0, 0.0, 0.00024, 0.0, 0.0, 0.0063, 0.00014, 0.0, 0.0, 0.00011, 0.0, 0.0, 0.00065, 0.0, 0.0003, 0.00081, 0.00055, 0.0003, 0.0, 0.00064, 0.0, 0.00032, 0.00077, 0.00096, 3e-05, 0.00013, 0.0, 0.0, 4e-05, 0.0, 9e-05, 0.0, 7e-05, 4e-05, 0.0, 0.0, 5e-05, 9e-05, 1e-05, 0.00019, 0.00012, 1e-05, 9e-05, 0.0002, 0.00014, 0.00022, 0.00017, 3e-05, 0.00021, 4e-05, 0.0, 0.0, 0.00012, 0.0, 1e-05, 0.00029, 7e-05, 1e-05, 0.0, 1e-05, 6e-05, 0.0, 0.00012, 0.0, 0.0, 0.00019, 0.0, 0.0, 0.0, 0.0002, 8e-05, 0.0, 0.0002, 0.00022, 0.0, 8e-05, 0.00028, 0.00044, 0.00076, 0.00068, 0.00057, 4e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00057, 0.0001, 1e-05, 0.00017, 0.00052, 2e-05, 0.0, 0.0, 0.00043, 2e-05, 5e-05, 0.0, 0.00028, 3e-05, 2e-05, 2e-05, 0.00039, 5e-05, 0.0, 0.0, 0.00045, 0.0, 3e-05, 5e-05, 0.00019, 1e-05, 2e-05, 1e-05, 0.00039, 0.00047, 0.00345, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00188, 0.0, 6e-05, 0.0, 0.00039, 0.00047, 9e-05, 0.00164, 0.00027, 0.00039, 0.00062, 0.00091, 0.00026, 1e-05, 0.00047, 0.00206, 0.00032, 0.00056, 0.00089, 2e-05, 0.0, 0.00049, 0.0, 0.0, 0.0, 0.0, 0.00014, 0.01475, 0.0, 0.0, 0.0, 0.00483, 0.0, 0.0, 0.00489, 0.00249, 0.0, 0.0, 0.00367, 
0.0, 0.00057, 0.0, 0.01256, 0.0, 0.00024, 9e-05, 0.00075, 0.0, 0.00051, 0.0, 0.0, 9e-05, 0.00024, 0.00025, 7e-05, 0.0, 7e-05, 0.0, 6e-05, 0.0, 0.0, 0.0, 0.00067, 0.00057, 0.0, 0.00027, 0.0, 0.0, 8e-05, 0.00019, 0.0, 0.0, 0.00013, 0.00023, 0.0, 0.0, 0.00013, 3e-05, 0.0, 0.00037, 0.0, 0.0, 3e-05, 0.00033, 0.0, 0.00024, 0.00029, 0.00014, 4e-05, 0.00017, 6e-05, 0.0, 2e-05, 0.0, 0.0, 9e-05, 0.00095, 1e-05, 0.00022, 0.00021, 0.00029, 0.0001, 3e-05, 0.0, 0.00034, 0.0, 0.00076, 0.00043, 0.0, 0.00017, 0.0, 0.0, 0.00034, 0.00084, 0.00034, 1e-05, 0.0, 0.00049, 0.00027, 0.00012, 0.00035, 0.00014, 0.00025, 0.0, 0.0, 0.00015, 0.0, 0.00023, 0.0, 0.0002, 9e-05, 0.0, 0.00023, 0.0, 4e-05, 0.00019, 0.0004, 8e-05, 0.00019, 4e-05, 0.00032, 0.00232, 0.00039, 0.00038, 0.0003, 8e-05, 0.0, 0.0, 0.00014, 0.00013, 0.0, 0.00013, 0.00011, 0.00019, 0.00023, 0.0, 0.00011, 0.00026, 0.00014, 0.0, 0.0, 8e-05, 0.0, 0.00053, 0.00047, 0.0, 3e-05, 0.00022, 0.0, 8e-05, 0.00086, 0.00038, 0.0, 5e-05, 9e-05, 0.00022, 0.00038, 0.00023, 0.0, 8e-05, 0.0, 0.0, 9e-05, 0.0, 1e-05, 0.00027, 0.00037, 4e-05, 0.00013, 0.00018, 0.00224, 0.00017, 0.00029, 0.0, 0.00257, 0.00017, 0.0, 0.00011, 0.00049, 0.00016, 0.0, 7e-05, 0.00076, 1e-05, 0.0, 0.0, 0.00076, 5e-05, 0.0, 2e-05, 0.00051, 0.0, 7e-05, 0.00016, 0.00034, 5e-05, 3e-05, 0.0, 0.00041, 3e-05, 5e-05, 8e-05, 0.0004, 0.00015, 0.0, 8e-05, 0.0001, 0.00026, 0.00025, 0.00054, 0.00034, 0.00025, 0.00026, 0.00038, 0.00057, 0.0027, 0.00285, 0.00046, 0.00082, 0.00106, 0.00329, 0.00019, 0.00011, 0.0, 0.0, 0.0, 0.0, 1e-05, 0.00021, 0.00404, 0.0, 0.0, 0.0002, 0.00093, 0.0001, 0.0, 0.0, 0.00067, 8e-05, 1e-05, 0.0, 0.00118, 0.0, 0.00019, 0.00027, 0.00044, 0.00053, 0.00017, 0.0, 0.0, 0.0, 0.0, 0.0, 4e-05, 0.0, 0.00014, 0.0, 0.0, 0.0, 0.0, 0.01268, 0.0, 0.0, 0.0, 0.01246, 0.0, 0.0, 0.00029, 0.00244, 0.00037, 0.00019, 0.0, 0.00062, 0.00057, 0.00023, 0.00039, 8e-05, 0.0001, 0.0, 0.00049, 0.00015, 0.0, 8e-05, 0.00184, 0.0, 0.0, 5e-05, 1e-05, 0.0003, 0.00018, 0.00036, 0.0, 0.00018, 
0.0003, 0.00024, 0.0, 0.00089, 0.00082, 0.00023, 8e-05, 0.0, 0.0001, 2e-05, 6e-05, 7e-05, 0.00013, 0.00013, 0.0, 0.0, 0.0, 0.00014, 8e-05, 3e-05, 0.00046, 5e-05, 0.0, 3e-05, 0.00014, 0.00012, 0.00033, 0.00023, 0.0, 7e-05, 0.00023, 0.00018, 7e-05, 0.0, 0.00021, 0.00022, 0.00047, 0.00038, 0.00023, 0.0, 0.0, 0.0, 6e-05, 0.0, 0.0, 2e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00027, 1e-05, 0.0, 0.00043, 0.00029, 0.0, 1e-05, 0.00029, 0.00031, 8e-05, 0.0001, 0.00016, 0.00039, 0.00024, 4e-05, 8e-05, 0.0, 0.0, 0.0, 0.00051, 9e-05, 0.0, 0.00015, 0.0, 0.0, 0.0, 2e-05, 0.00011, 0.0, 0.0001, 0.00016, 0.0, 0.0, 0.00029, 1e-05, 9e-05, 0.00035, 0.00041, 9e-05, 1e-05, 0.00041, 0.0018, 0.00048, 0.00039, 0.00066, 0.00026, 1e-05, 0.0001, 0.00026, 0.0002, 0.0001, 1e-05, 0.00021, 3e-05, 0.00017, 0.00041, 2e-05, 0.00225, 0.0, 0.0, 0.00026, 0.00184, 0.00033, 0.0, 0.0, 0.00054, 0.0, 0.0001, 0.0, 0.00047, 0.0, 0.0, 0.0, 0.00035, 7e-05, 0.0001, 0.0, 0.00042, 2e-05, 0.0, 7e-05, 0.00041, 0.00043, 0.00024, 0.00022, 0.0, 0.0, 0.0, 3e-05, 3e-05, 6e-05, 6e-05, 4e-05, 0.0, 0.00016, 0.0, 0.0, 0.0, 0.00048, 2e-05, 0.0, 0.0, 7e-05, 3e-05, 0.0, 0.00027, 0.00017, 3e-05, 0.00042, 0.00026, 3e-05, 0.00017, 0.00033, 0.00029, 0.00035, 0.00027, 9e-05, 5e-05, 7e-05, 2e-05, 0.0, 0.0003, 0.0, 5e-05, 3e-05, 0.00028, 7e-05, 0.0, 0.0, 0.00079, 0.0, 0.0, 0.00025, 0.00053, 0.00016, 6e-05, 0.0, 0.00048, 0.00018, 6e-05, 9e-05, 0.00249, 0.0, 0.0, 0.0, 6e-05, 4e-05, 5e-05, 0.00196, 0.0, 1e-05, 0.0, 1e-05, 0.00014, 0.0, 0.00086, 0.0, 0.0, 0.00033, 6e-05, 0.0, 0.00059, 8e-05, 0.00023, 8e-05, 0.0004, 0.0, 8e-05, 0.00023, 0.00016, 0.00024, 0.00263, 0.00212, 0.00103, 1e-05, 0.00017, 0.0, 0.00014, 0.00024, 0.0, 0.00014, 4e-05, 0.00202, 0.00012, 1e-05, 0.0, 0.00028, 8e-05, 5e-05, 0.00029, 0.00027, 5e-05, 8e-05, 0.00028, 0.00049, 0.00046, 0.0005, 0.0001, 0.00016, 0.0, 0.0002, 0.00029, 0.0, 0.0, 0.0, 7e-05, 0.0002, 0.0, 0.00021, 0.0, 1e-05, 7e-05, 2e-05, 0.00251, 0.00216, 0.0, 2e-05, 0.0, 0.0002, 0.0, 6e-05, 3e-05, 0.0, 0.0, 7e-05, 3e-05, 
1e-05, 0.0, 0.0, 0.0, 0.0, 2e-05, 0.00033, 0.00014, 0.0006, 7e-05, 0.00014, 0.00033, 0.00063, 0.00187, 0.0, 0.00013, 0.00013, 0.0, 0.0001, 0.00017, 0.0, 0.0, 0.00016, 4e-05, 8e-05, 0.0, 0.00032, 0.00035, 0.00038, 1e-05, 0.0, 0.0001, 0.0, 0.0, 0.0, 0.0005, 0.0, 3e-05, 0.0, 2e-05, 0.0, 0.0, 0.00051, 0.00017, 0.0, 0.0, 0.0, 0.00028, 0.00012, 0.00045, 0.00073, 5e-05, 0.00017, 0.00012, 0.00072, 0.00016, 0.00016, 0.00018, 0.00041, 0.00014, 0.00018, 0.00017, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00125, 0.00029, 0.00034, 0.00044, 0.0, 3e-05, 1e-05, 0.00015, 0.00011, 1e-05, 3e-05, 0.00034, 0.0, 0.00049, 0.0005, 0.00027, 0.00036, 0.00011, 1e-05, 0.0, 0.00027, 4e-05, 1e-05, 8e-05, 0.0004, 0.0, 5e-05, 1e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00144, 0.00155, 0.00106, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00166, 0.0, 0.0, 0.0, 0.0, 0.0001, 0.0, 0.00014, 0.0, 0.0, 0.00035, 0.00015, 2e-05, 0.00018, 0.00013, 0.0, 0.0, 0.0, 0.00255, 0.00012, 0.0, 0.00013, 0.0, 0.0, 4e-05, 0.00024, 0.0003, 7e-05, 0.00051, 0.00013, 7e-05, 0.0003, 0.00042, 0.0, 0.00054, 0.00049, 0.00027, 0.00032, 0.0001, 0.0, 0.00013, 0.00026, 7e-05, 0.00017, 2e-05, 0.00028, 9e-05, 0.00012, 6e-05, 0.00021, 3e-05, 0.0001, 0.00027, 0.00018, 0.0001, 3e-05, 0.00031, 0.0001, 0.00056, 0.00053, 0.0003, 0.00015, 0.00012, 5e-05, 1e-05, 5e-05, 0.0, 0.0, 0.00011, 2e-05, 0.0, 0.0001, 1e-05, 0.00011, 0.0, 0.0, 0.0, 4e-05, 0.0, 0.0, 2e-05, 0.0, 0.0, 6e-05, 7e-05, 0.0004, 0.0, 7e-05, 6e-05, 0.00037, 0.00035, 0.00037, 0.00036, 0.00012, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0023, 0.00032, 0.00054, 0.00025, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.10149, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.4483, 0.0, 0.0, 0.0, 0.0, 0.47032, 0.0, 0.0, 
0.47032, 2.16274, 0.10149, 0.0, 3.77113, 0.0, 0.0, 0.0, 0.64371, 0.75454, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1894, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.18944, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.18944, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00051, 0.0, 0.0, 0.0, 0.0004, 0.0, 0.0, 0.0, 0.00054, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.66515, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.31179, 0.01541, 0.0, 0.0, 0.0, 0.17108, 0.0, 0.01541, 0.0, 0.0, 0.0, 0.00139, 0.0, 0.17108, 0.0, 0.56192, 0.02113, 0.00549, 0.00482, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00458, 0.0, 0.0, 0.0, 0.01005, 0.0, 0.0, 0.0, 0.01365, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 4e-05, 6e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00055, 0.00058, 0.00045, 0.00055, 0.00046, 0.00045, 0.00058, 0.00046, 0.00136, 0.0, 0.0, 0.00066, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.33286, 0.0, 0.0, 0.83482, 0.0, 0.22169, 0.0, 0.02988, 0.4062, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.12134, 0.0, 0.0, 0.12134, 0.0, 0.0, 0.50531, 0.60898, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.69564, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00126, 0.0, 0.0, 0.0, 0.00012, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.58807, 0.0, 0.0, 0.0, 0.56902, 0.0005, 0.0, 0.0, 0.00033, 0.00057, 0.0, 0.0, 0.0002, 0.00056, 0.0, 0.0, 0.00024, 0.0007, 
0.00116, 0.0005, 7e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00141, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00032, 0.0, 0.0, 0.0, 0.00041, 0.0, 7e-05, 0.0, 0.00094, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00279, 0.0, 0.0, 0.0, 0.00047, 0.00071, 9e-05, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 2.11505, 0.0, 0.57436, 0.0, 0.0, 0.0, 0.0, 0.0, 2.02428, 0.0, 0.43805, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.4321, 0.0, 0.0, 0.38338, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.13446, 0.0, 0.0, 0.0, 0.13441, 0.0, 0.0, 0.0, 0.24681, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.04903, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00044, 0.0, 0.0, 0.0, 0.00037, 0.0, 0.0, 0.0, 0.0008, 0.0, 0.0, 0.0, 0.002, 0.0, 0.00021, 0.0023, 0.0015, 0.00021, 0.0, 0.00191, 0.01185, 0.00044, 0.00037, 0.00069, 7e-05, 0.00022, 0.00022, 0.00015, 0.0, 0.0, 0.0, 0.0, 0.00018, 0.00024, 0.00013, 0.0003, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.00041, 0.0])))]},

'version': 2}

The PubResult object has additional resilience metadata about the learned noise models used in mitigation.

# Print learned layer noise metadata
for field, value in pub_result.metadata["resilience"]["layer_noise"].items():
    print(f"{field}: {value}")

Output:

noise_overhead: Infinity

total_mitigated_layers: 18

unique_mitigated_layers: 3

unique_mitigated_layers_noise_overhead: [1.4100369479435003e+44, 3.407263868699073e+112, 3.500660129782563e+37]
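The `noise_overhead: Infinity` entry is worth a sanity check. One plausible reading, stated here as an assumption rather than documented behavior, is that the total overhead is the product of the per-layer overheads over all 18 mitigated layers, which would exceed the largest representable double-precision value. The sketch below checks that arithmetic in log space, further assuming that each of the 3 unique layers appears equally often (18 / 3 = 6 times).

```python
import math
import sys

# Per-layer overheads copied from the metadata output above
layer_overheads = [
    1.4100369479435003e44,
    3.407263868699073e112,
    3.500660129782563e37,
]
total_mitigated_layers = 18

# Assumption: every unique layer is repeated the same number of times
repeats = total_mitigated_layers // len(layer_overheads)

# Work with log10 so the product itself never has to be formed
log10_total = repeats * sum(math.log10(g) for g in layer_overheads)
log10_float_max = math.log10(sys.float_info.max)

overflows = log10_total > log10_float_max
print(f"log10(total overhead) ~ {log10_total:.1f}")
print(f"log10(largest float)  ~ {log10_float_max:.1f}")
print(f"Product overflows to infinity: {overflows}")
```

Under these assumptions the product is far beyond the floating-point range, which is consistent with the reported `Infinity`.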

# Exact data computed using the methods described in the original reference

# Y. Kim et al. "Evidence for the utility of quantum computing before fault tolerance" (Nature 618, 500–505 (2023))

# Directly used here for brevity

exact_data = np.array(

[

1,

0.9899,

0.9531,

0.8809,

0.7536,

0.5677,

0.3545,

0.1607,

0.0539,

0.0103,

0.0012,

0.0,

]

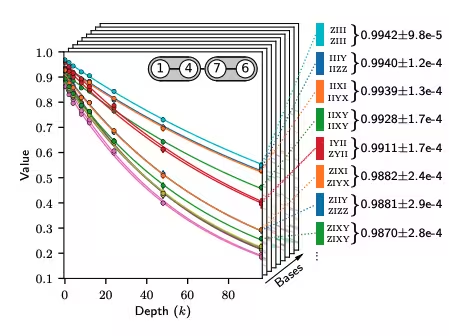

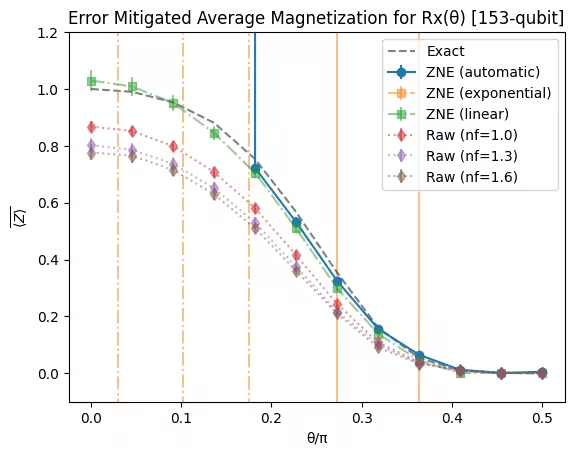

)Plot Trotter simulation results

The following code creates a plot to compare the raw and mitigated experiment results against the exact solution.

"""Result visualization functions"""

def plot_trotter_results(
    pub_result: PubResult,
    angles: Sequence[float],
    plot_noise_factors: Sequence[float] | None = None,
    plot_extrapolator: Sequence[str] | None = None,
    exact: np.ndarray | None = None,
    close: bool = True,
):
    """Plot average magnetization from ZNE result data.

    Args:
        pub_result: The Estimator PubResult for the PEA experiment.
        angles: The Rx angle values for the experiment.
        plot_noise_factors: If provided, plot the unextrapolated data for
            these noise factors.
        plot_extrapolator: If provided, plot the result of each listed
            extrapolator in addition to the automatic method.
        exact: Optional, the exact values to include in the plot. Should be a 1D
            array-like where the values represent exact magnetization.
        close: Close the Matplotlib figure before returning.

    Returns:
        The figure.
    """
    data = pub_result.data
    evs = data.evs
    num_qubits = evs.shape[0]
    num_params = evs.shape[1]
    angles = np.asarray(angles).ravel()
    if angles.shape != (num_params,):
        raise ValueError(
            f"Incorrect number of angles for input data {angles.size} != {num_params}"
        )

    # Take average magnetization of qubits and its standard error
    x_vals = angles / np.pi
    y_vals = np.mean(evs, axis=0)
    y_errs = np.std(evs, axis=0) / np.sqrt(num_qubits)

    fig, _ = plt.subplots(1, 1)

    # Plot auto method
    plt.errorbar(x_vals, y_vals, y_errs, fmt="o-", label="ZNE (automatic)")

    # Plot individual extrapolator results
    if plot_extrapolator:
        y_vals_extrap = np.mean(data.evs_extrapolated, axis=0)
        y_errs_extrap = np.std(data.evs_extrapolated, axis=0) / np.sqrt(
            num_qubits
        )
        for i, extrap in enumerate(plot_extrapolator):
            plt.errorbar(
                x_vals,
                y_vals_extrap[:, i, 0],
                y_errs_extrap[:, i, 0],
                fmt="s-.",
                alpha=0.5,
                label=f"ZNE ({extrap})",
            )

    # Plot raw results
    if plot_noise_factors:
        y_vals_raw = np.mean(data.evs_noise_factors, axis=0)
        y_errs_raw = np.std(data.evs_noise_factors, axis=0) / np.sqrt(
            num_qubits
        )
        for i, nf in enumerate(plot_noise_factors):
            plt.errorbar(
                x_vals,
                y_vals_raw[:, i],
                y_errs_raw[:, i],
                fmt="d:",
                alpha=0.5,
                label=f"Raw (nf={nf:.1f})",
            )

    # Plot exact data
    if exact is not None:
        plt.plot(x_vals, exact, "--", color="black", alpha=0.5, label="Exact")

    plt.ylim(-0.1, 1.2)
    plt.xlabel("θ/π")
    plt.ylabel(r"$\overline{\langle Z \rangle}$")
    plt.legend()
    plt.title(
        f"Error Mitigated Average Magnetization for Rx(θ) [{num_qubits}-qubit]"
    )
    if close:
        plt.close(fig)
    return fig


zne_metadata = primitive_result.metadata["resilience"]["zne"]

# Plot Trotter simulation results
fig = plot_trotter_results(
    pub_result,
    parameter_values,
    plot_extrapolator=zne_metadata["extrapolator"],
    plot_noise_factors=zne_metadata["noise_factors"],
    exact=exact_data,
)
display(fig)

Output:

The unmitigated values (noise factor nf=1.0) deviate substantially from the exact values, whereas the mitigated values lie close to them, which demonstrates the utility of the PEA-based mitigation technique.

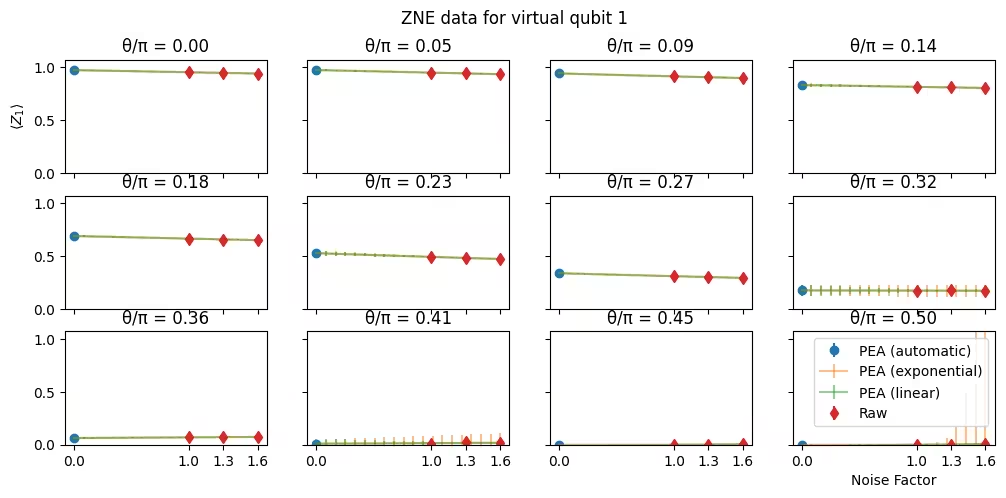
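The visual comparison can also be expressed as a single number per curve. The following is a minimal sketch that computes the mean absolute error of the qubit-averaged magnetization against the exact values. The arrays `evs_raw` and `evs_mitigated` are synthetic stand-ins generated here for illustration (they are not experiment data), and `num_qubits` and the helper `mean_abs_error` are hypothetical names; with real results you would pass arrays shaped like `data.evs`, that is `(num_qubits, num_params)`.

```python
import numpy as np

# Exact magnetization values quoted earlier in this tutorial
exact = np.array(
    [1, 0.9899, 0.9531, 0.8809, 0.7536, 0.5677,
     0.3545, 0.1607, 0.0539, 0.0103, 0.0012, 0.0]
)

# Synthetic stand-ins (assumption): a damped signal for the raw data and
# an unbiased but noisier signal for the mitigated data
rng = np.random.default_rng(seed=0)
num_qubits = 20  # hypothetical value for illustration
shape = (num_qubits, exact.size)
evs_raw = 0.6 * exact + rng.normal(0.0, 0.02, size=shape)
evs_mitigated = exact + rng.normal(0.0, 0.05, size=shape)


def mean_abs_error(evs: np.ndarray, reference: np.ndarray) -> float:
    """Average over qubits, then take the mean absolute deviation."""
    avg_magnetization = np.mean(evs, axis=0)
    return float(np.mean(np.abs(avg_magnetization - reference)))


mae_raw = mean_abs_error(evs_raw, exact)
mae_mitigated = mean_abs_error(evs_mitigated, exact)
print(f"MAE raw:       {mae_raw:.4f}")
print(f"MAE mitigated: {mae_mitigated:.4f}")
```

A summary statistic like this makes it easier to compare runs with different noise factors or extrapolators than reading values off the plot.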

Plot extrapolation results for individual qubits

Finally, the following code creates a plot to show the extrapolation curves for different values of theta on a specific qubit.

def plot_qubit_zne_data(
    pub_result: PubResult,
    angles: Sequence[float],
    qubit: int,
    noise_factors: Sequence[float],
    extrapolator: Sequence[str] | None = None,
    extrapolated_noise_factors: Sequence[float] | None = None,
    num_cols: int | None = None,
    close: bool = True,
):
    """Plot ZNE extrapolation data for a specific virtual qubit.

    Args:
        pub_result: The Estimator PubResult for the PEA experiment.
        angles: The Rx theta angles used for the experiment.
        qubit: The virtual qubit index to plot.
        noise_factors: The raw noise factors.
        extrapolator: The extrapolator metadata for multiple extrapolators.
        extrapolated_noise_factors: The noise factors used for extrapolation.
        num_cols: The number of columns for the generated subplots.
        close: Close the Matplotlib figure before returning.

    Returns:
        The Matplotlib figure.
    """
    data = pub_result.data
    evs_auto = data.evs[qubit]
    stds_auto = data.stds[qubit]
    evs_extrap = data.evs_extrapolated[qubit]
    stds_extrap = data.stds_extrapolated[qubit]
    evs_raw = data.evs_noise_factors[qubit]
    stds_raw = data.stds_noise_factors[qubit]
    num_params = evs_auto.shape[0]
    angles = np.asarray(angles).ravel()
    if angles.shape != (num_params,):
        raise ValueError(
            f"Incorrect number of angles for input data {angles.size} != {num_params}"
        )

    # Make a square subplot
    num_cols = num_cols or int(np.ceil(np.sqrt(num_params)))
    num_rows = int(np.ceil(num_params / num_cols))
    fig, axes = plt.subplots(
        num_rows, num_cols, sharex=True, sharey=True, figsize=(12, 5)
    )
    fig.suptitle(f"ZNE data for virtual qubit {qubit}")

    for pidx, ax in zip(range(num_params), axes.flat):
        # Plot auto extrapolated
        ax.errorbar(
            0,
            evs_auto[pidx],
            stds_auto[pidx],
            fmt="o",
            label="PEA (automatic)",
        )

        # Plot extrapolators
        if (
            extrapolator is not None
            and extrapolated_noise_factors is not None
        ):
            for i, method in enumerate(extrapolator):
                ax.errorbar(
                    extrapolated_noise_factors,
                    evs_extrap[pidx, i],
                    stds_extrap[pidx, i],
                    fmt="-",
                    alpha=0.5,
                    label=f"PEA ({method})",
                )

        # Plot raw
        ax.errorbar(
            noise_factors, evs_raw[pidx], stds_raw[pidx], fmt="d", label="Raw"
        )

        ax.set_yticks([0, 0.5, 1, 1.5, 2])
        ax.set_ylim(0, max(1, 1.1 * max(evs_auto)))
        ax.set_xticks([0, *noise_factors])
        ax.set_title(f"θ/π = {angles[pidx]/np.pi:.2f}")
        if pidx == 0:
            ax.set_ylabel(r"$\langle Z_{" + str(qubit) + r"} \rangle$")
        if pidx == num_params - 1:
            ax.set_xlabel("Noise Factor")
            ax.legend()

    if close:
        plt.close(fig)
    return fig


virtual_qubit = 1

plot_qubit_zne_data(
    pub_result=pub_result,
    angles=parameter_values,
    qubit=virtual_qubit,
    noise_factors=zne_metadata["noise_factors"],
    extrapolator=zne_metadata["extrapolator"],
    extrapolated_noise_factors=zne_metadata["extrapolated_noise_factors"],
)

Output:
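To make the extrapolation curves in these panels more concrete, here is a minimal sketch of what an extrapolator does for a single qubit and a single angle: fit a model to the expectation values measured at amplified noise, then evaluate the fit at noise factor 0. The noise factors and the decay parameters below are hypothetical, the data is synthetic and noiseless, and the fits use plain `np.polyfit` rather than the fitting routine Qiskit Runtime applies internally.

```python
import numpy as np

# Hypothetical noise factors and a synthetic exponential decay (assumption)
noise_factors = np.array([1.0, 1.5, 2.0, 3.0])
true_zero_noise_value = 0.9
decay_rate = 0.3
evs = true_zero_noise_value * np.exp(-decay_rate * noise_factors)

# Linear extrapolation: fit evs ~ slope * nf + intercept, then evaluate at nf = 0
slope, intercept = np.polyfit(noise_factors, evs, deg=1)
linear_zero = float(intercept)

# Exponential extrapolation: fit log(evs) ~ log(a) - b * nf, so the value at nf = 0 is a
log_slope, log_intercept = np.polyfit(noise_factors, np.log(evs), deg=1)
exponential_zero = float(np.exp(log_intercept))

print(f"Linear extrapolation to nf=0:      {linear_zero:.4f}")
print(f"Exponential extrapolation to nf=0: {exponential_zero:.4f}")
print(f"Value used to generate the data:   {true_zero_noise_value:.4f}")
```

Because the synthetic data follows an exponential decay, the exponential fit recovers the generating value while the linear fit underestimates it. On hardware data neither model is guaranteed to hold, which is one reason to inspect the per-qubit extrapolation curves.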
